9 Biology & Bioinformatics

9.1 R in Biology

R became popular in biological data analysis in the early to mid 2000s, when microarray technology came into widespread use enabling researchers to look for statistical differences in gene expression for thousands of genes across large numbers of samples. As a result of this popularity, a community of biological researchers and data analysts created a collection of software packages called Bioconductor, which made a vast array of cutting edge statistical and bioinformatic methodologies widely available.

Due to its ease of use, widespread community support, rich ecosystem of biological data analysis packages, and to it being free, R remains one of the primary languages of biological data analysis. The language’s early focus on statistical analysis, and later transition to data science in general, makes it very useful and accessible to perform the kinds of analyses the new data science of biology required. It is also a bridge between biologists without a computational background and statisticians and bioinformaticians, who invent new methods and implement them as R packages that are easily accessible by all. The language and the package ecosystems and communities it supports continue to be a major source of biological discovery and innovation.

As a data science, biology benefits from the main strengths of R and the tidyverse when combined with the powerful analytical techniques available in Bioconductor packages, namely to manipulate, visualize, analyze, and communicate biological data.

9.2 Biological Data Overview

9.2.1 Types of Biological Data

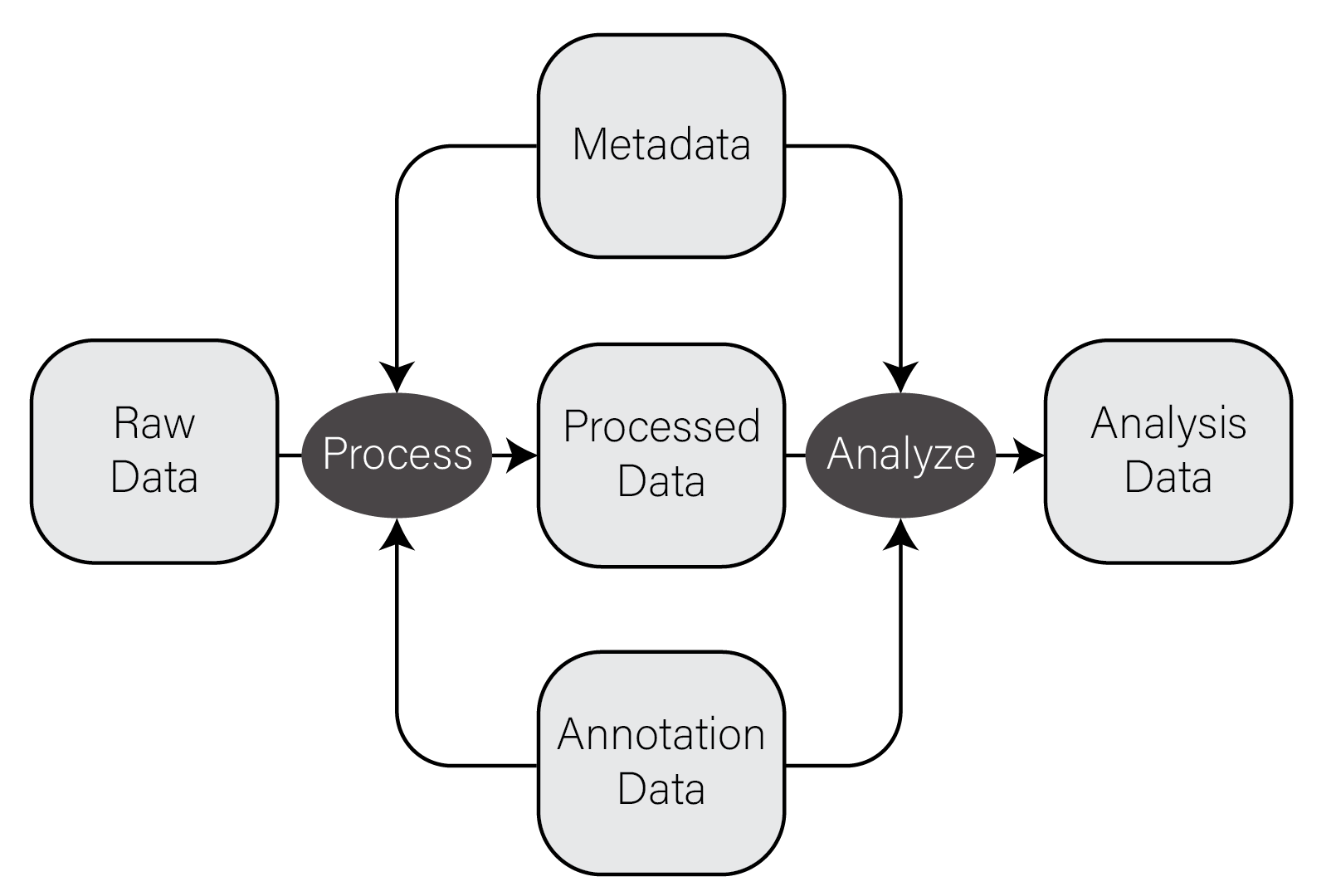

In very general terms, there are five types of data used in biological data analysis: raw/primary data, processed data, analysis results, metadata, and annotation data.

Raw/primary data. These data are the primary observations made by whatever instruments/techniques we are using for our experiment. These may include high-throughput sequencing data, mass/charge ratio data from mass spectrometry, 16S rRNA sequencing data from metagenomic studies, SNPs from genotyping assays, etc. These data can be very large and are often not efficiently processed using R. Instead, specialized tools built outside of R are used to first process the primary data into a form that is amenable to analysis. The most common primary biological data types include Microarrays, High Throughput Sequencing data, and mass spectrometry data.

Processed data. Processed data is the result of any analysis or transformation of primary data into an intermediate, more interpretable form. For example, when performing gene expression analysis with RNASeq, short reads that form the primary data are typically aligned against a genome and then counted against annotated genes, resulting in a count for each gene in the genome. Typically, these counts are then combined for many samples into a single matrix and subject to downstream analyses like differential expression.

Analysis results. Analysis results aren’t data per se, but are the results of analysis of primary data or processed results. For example, in gene expression studies, a first analysis is often differential expression, where each gene in the genome is tested for expression associated with some outcome of interest across many samples. Each gene then has a number of statistics and related values, like log2 fold change, nominal and adjusted p-value, etc. These forms of data are usually what we use to form interpretations of our datasets and therefore we must manipulate them in much the same way as any other dataset.

Metadata. In most experimental settings, multiple samples have been processed and had the same primary data type collected. These samples also have information associated with them, which is here termed metadata, or “data about data”. In our gene expression of post mortem brain experiments mentioned above, the information about the individuals themselves, including age at death, whether the person had a disease, the measurements of tissue quality, etc. is the metadata. The primary and processed data and metadata are usually stored in different files, where the metadata (or sample information or sample data, etc) will have one column indicating the unique identifier (ID) of each sample. The processed data will typically have columns named for each of the sample IDs.

Annotation data. A tremendous amount of knowledge has been generated about biological entities, e.g. genes, especially since the publication of the human genome. Annotation data is publicly available information about the features we measure in our experiments. This may come in the form of the coordinates in a genome where genes exist, any known functions of those genes, the domains found in proteins and their relative sequence, gene identifier cross references across different gene naming systems (e.g. symbol vs Ensembl ID), single nucleotide polymorphism genomic locations and associations with traits or diseases, etc. This is information that we use to aid in interpretation of our experimental data but generally do not generate ourselves. Annotation data comes in many forms, some of which are in CSV format.

The figure below contains a simple diagram of how these different types of data work together for a typical biological data analysis.

9.2.2 CSV Files

The most common, convenient, and flexible data file format in biology and

bioinformatics is the character-delimited or character-separated text file.

These files are plain text files (i.e. not the native file format of any

specific program, like Excel) that generally contain rectangles of data. When

formatted correctly, you can think of these files as containing a grid or matrix

of data values with some number of columns, each of which has the same number of

values. Each line of these files has some number of data values separated by a

consistent character, most commonly the comma which are called comma-separated

value, or “CSV”, files

and filenames typically end with the extension .csv. Note that

other characters, especially the

id,somevalue,category,genes

1,2.123,A,APOE

4,5.123,B,"HOXA1,HOXB1"

7,8.123,,SNCASome properties and principles of CSV files:

- The first line often but not always contains the column names of each column

- Each value is delimited by the same character, in this case

, - Values can be any value, including numbers and characters

- When a value contains the delimiting character (e.g. HOXA1,HOXB1 contains a

,), the value is wrapped in double quotes - Values can be missing, indicated by sequential delimiters (i.e.

,,or one,at the end of the line, if the last column value is missing) - There is no delimiter at the end of the lines

- To be well-formatted every line must have the same number of delimited values

These same properties and principles apply to all character-separated files, regardless of the specific delimiter used. If a file follows these principles, they can be loaded very easily into R or any other data analysis setting. ### Common Biological Data Matrices

Typically the first data set you will work with in R is processed data as described in the previous section. This data has been transformed from primary data in some way such that it (usually) forms a numeric matrix with features as rows and samples as columns. The first column of these files usually contains a feature identifier, e.g. gene identifier, genomic locus, probe set ID, etc and the remaining columns have numerical values, one per sample. The first row is usually column names for all the columns in the file. Below is an example of one of these files from a microarray gene expression dataset loaded into R:

intensities <- read_csv("example_intensity_data.csv")

intensities

# A tibble: 54,675 x 36

probe GSM972389 GSM972390 GSM972396 GSM972401 GSM972409 GSM972412 GSM972413 GSM972422 GSM972429

<chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 1007_s_at 9.54 10.2 9.72 9.68 9.35 9.89 9.70 9.67 9.87

2 1053_at 7.62 7.92 7.17 7.24 8.20 6.87 6.62 7.23 7.45

3 117_at 5.50 5.56 5.06 7.44 5.19 5.72 5.87 6.15 5.46

4 121_at 7.27 7.96 7.42 7.34 7.49 7.76 7.44 7.66 8.02

5 1255_g_at 2.79 3.10 2.78 2.91 3.02 2.73 2.78 3.56 2.83

6 1294_at 7.51 7.28 7.00 7.18 7.38 6.98 6.90 7.54 7.66

7 1316_at 3.89 4.36 4.24 3.94 4.20 4.34 4.06 4.24 4.11

8 1320_at 4.65 4.91 4.70 4.78 5.06 4.71 4.55 4.58 5.10

9 1405_i_at 8.03 7.47 5.42 7.21 9.48 6.79 6.57 8.50 6.36

10 1431_at 3.09 3.78 3.33 3.12 3.21 3.27 3.37 3.84 3.32

# ... with 54,665 more rows, and 26 more variables: GSM972433 <dbl>, GSM972438 <dbl>, GSM972440 <dbl>,

# GSM972443 <dbl>, GSM972444 <dbl>, GSM972446 <dbl>, GSM972453 <dbl>, GSM972457 <dbl>,

# GSM972459 <dbl>, GSM972463 <dbl>, GSM972467 <dbl>, GSM972472 <dbl>, GSM972473 <dbl>,

# GSM972475 <dbl>, GSM972476 <dbl>, GSM972477 <dbl>, GSM972479 <dbl>, GSM972480 <dbl>,

# GSM972487 <dbl>, GSM972488 <dbl>, GSM972489 <dbl>, GSM972496 <dbl>, GSM972502 <dbl>,

# GSM972510 <dbl>, GSM972512 <dbl>, GSM972521 <dbl>The file has 54,676 rows, consisting of one header row which R loads in as the

column names, and the remaining are probe sets, one per row. There are 36

columns, where the first contains the probe set ID (e.g. 1007_s_at) and the

remaining 35 columns correspond to samples.

9.2.3 Biological data is NOT Tidy!

As mentioned in the tidy data section, the tidyverse packages assume data to be in so-called “tidy format”, with variables as columns and observations as rows. Unfortunately, certain forms of biological data are typically available in the opposite orientation - variables are in rows and observations are in columns. This is primarily true in feature data matrices, e.g. gene expression counts matrices, where the number of variables (e.g. genes) is much larger than the number of samples, which tend to be small very small compared with the number of features. This format is convenient for humans to interact with using, e.g. spreadsheet programs like Microsoft Excel, but can unfortunately make performing certain operations on them challenging in tidyverse.

For example, consider the microarray expression dataset in the previous section. Each of the 54,676 rows is a probeset, and each of the 35 numeric columns is a sample. This is a very large number of probesets to consider, especially if we plan to conduct a statistical test on each, which would impose a substantial multiple hypothesis testing burden on our results. We may therefore wish to eliminate probesets that have very low variance from the overall dataset, since these probesets are not likely to have a detectable statistical difference in our tests. However, computing the variance for each probeset is a computation across all columns, not on columns themselves, and this is not what tidyverse is designed to do well. Said differently, R and tidyverse do not operate by default on the rows of a data frame, tibble, or matrix.

Both base R and tidyverse are optimized to perform computations on columns, not rows. The reasons for this are buried in the details of how the R program itself was written to organize data internally and are beyond the scope of this book. The consequence of this design choice is that, while we can perform operations on the rows rather than the columns of a data structure, our code may perform very poorly (i.e. take a very long time to run).

When working with these datasets, we have a couple options to deal with this issue:

Pivot into long format. As described in the Rearranging Data section, we can rearrange our tibble to be more amenable to certain computations. In our earlier example, we wish to group all of our measurements by probeset and compute the variance of each, then possibly filter out probesets based on low variance. We can therefore combine

pivot_longer(),group_by(),summarize(), and finallyleft_join()to perform this operation. Exactly how to do this is left as an exercise in [Assignment 1].-

Compute row-wise statistics using

apply(). As described in Iteration, R is a functional programming language and implements iteration in a functional style using theapply()function. Theapply() functionaccepts aMARGINargument of 1 or 2 if the provided function is to be applied to the columns or rows, respectively. This method can be used to compute a summary statistic on each row of a tibble and the result saved back into the tibble using the column set operator:intensity_variance <- apply(intensities, 2, var) intensities$variance <- intensity_variance

9.3 Bioconductor

Bioconductor is an organized collection of strictly biological analysis methods packages for R. These packages are hosted and maintained outside of CRAN because the maintainers enforce a more rigorous set of coding quality, testing, and documentation standards than is required by normal R packages. These stricter requirements arose from a recognition that software packages are generally only as usable as their documentation allows them to be, and also that many if not most of the users of these packages are not statisticians or experienced computer programmers. Rather, they are people like us: biological analysis practitioners who may or may not have substantial coding experience but must analyze our data nonetheless. The excellent documentation and community support of the bioconductor ecosystem is a primary reason why R is such a popular language in biological analysis.

Bioconductor is divided into roughly two sets of packages: core maintainer packages and user contributed packages. The core maintainer packages are among the most critical, because they define a set of common objects and classes (e.g. the ExpressionSet class in the Biobase package) that all Bioconductor packages know how to work with. This common base provides consistency among all Bioconductor packages thereby streamlining the learning process. User contributed and maintained packages provide specialized functionality for specific types of analysis.

Bioconductor itself must be installed prior to installing other Bioconductor packages. To [install bioconductor], you can run the following:

if (!require("BiocManager", quietly = TRUE))

install.packages("BiocManager")

BiocManager::install(version = "3.14")Bioconductor undergoes regular releases indicated by a version, e.g. version = "3.14" at the time of writing. Every bioconductor package also has a version,

and each of those versions may or may not be compatible with a specific version

of Bioconductor. To make matters worse, Bioconductor versions are only

compatible with certain versions of R. Bioconductor version 3.14 requires R

version 4.1.0, and will not work with older R versions. These versions can cause

major version compatibility issues when you are forced to use older versions of

R, as may sometimes be the case on managed compute clusters. There is not a good

general solution for ensuring all your packages work together, but the general

best rule of thumb is to use the most current version of R and all packages at

the time when you write your code.

The BiocManager package is the only Bioconductor package installable using

install.packages(). After installing the BiocManager package, you may then

install bioconductor packages:

# installs the affy bioconductor package for microarray analysis

BiocManager::install("affy")One key aspect of Bioconductor packages is consistent and helpful documentation; every package page on the Bioconductor.org site lists a block of code that will install the package, e.g. for the affy package. In addition, Biconductor provides three types of documentation:

- Workflow tutorials on how to perform specific analysis use cases

- Package vignettes for every package, which provide a worked example of how to use the package that can be adapted by the user to their specific case

- Detailed, consistently formatted reference documentation that gives precise information on functionality and use of each package

The thorough and useful documentation of packages in Bioconductor is one of the reasons why the package ecosystem, and R, is so widely used in biology and bioinformatics.

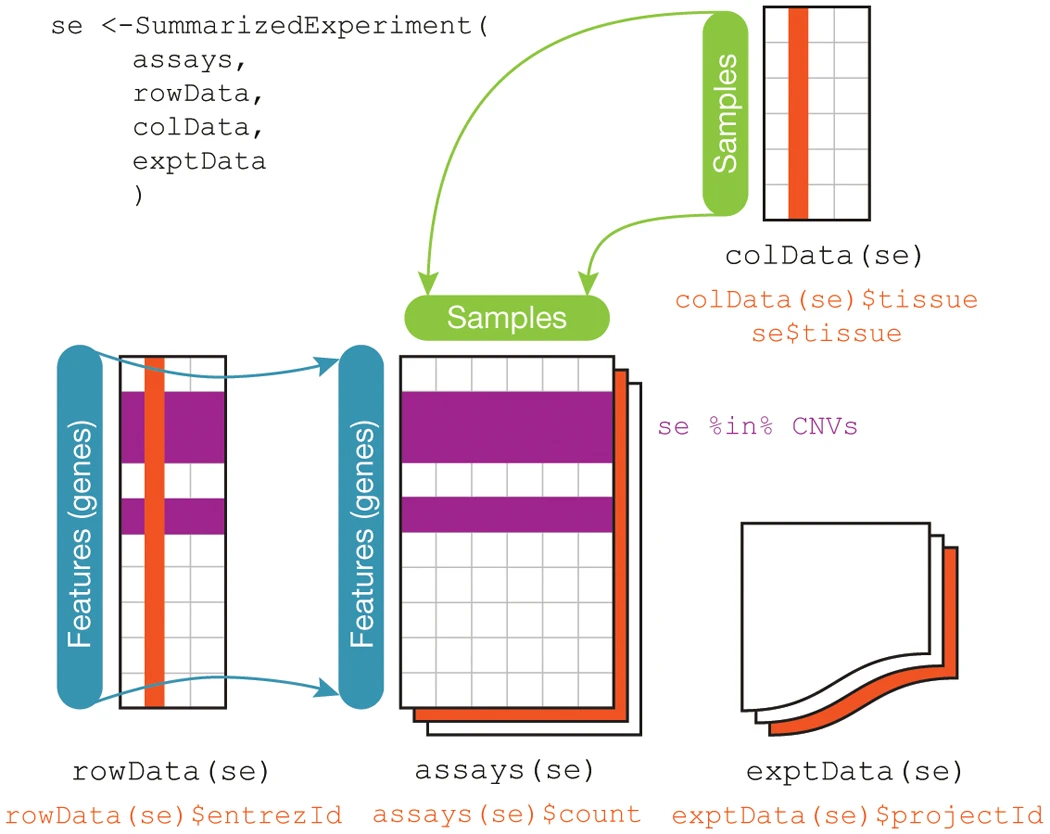

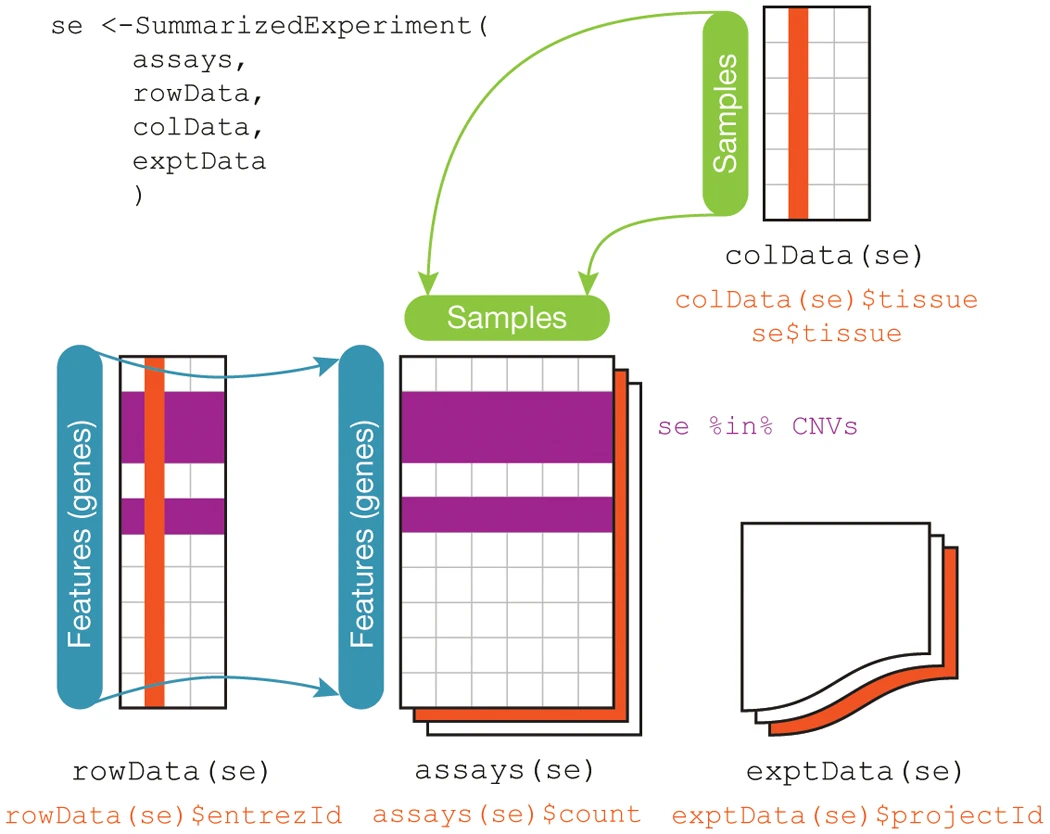

The base Bioconductor packages define convenient data structures for storing and analyzing many types of data. Recall earlier in the Types of Biological Data section, we described five types of biological data: primary, processed, analysis, metadata, and annotation data. Bioconductor provides a convenient class for storing many of these different data types together in one place, specifically the SummarizedExperiment class in the package of the same name package. An illustration of what a SummarizedExperiment object stores is below, from the Bioconductor maintainer’s paper in Nature:

As you can see from the figure, this class stores processed data (assays),

metadata (colData and exptData), and annotation data (rowData). The

SummarizedExperiment class is used ubiquitously throughout the Bioconductor

package ecosystem, as are other core classes some of which we will cover later

in this chapter.

9.4 Microarrays

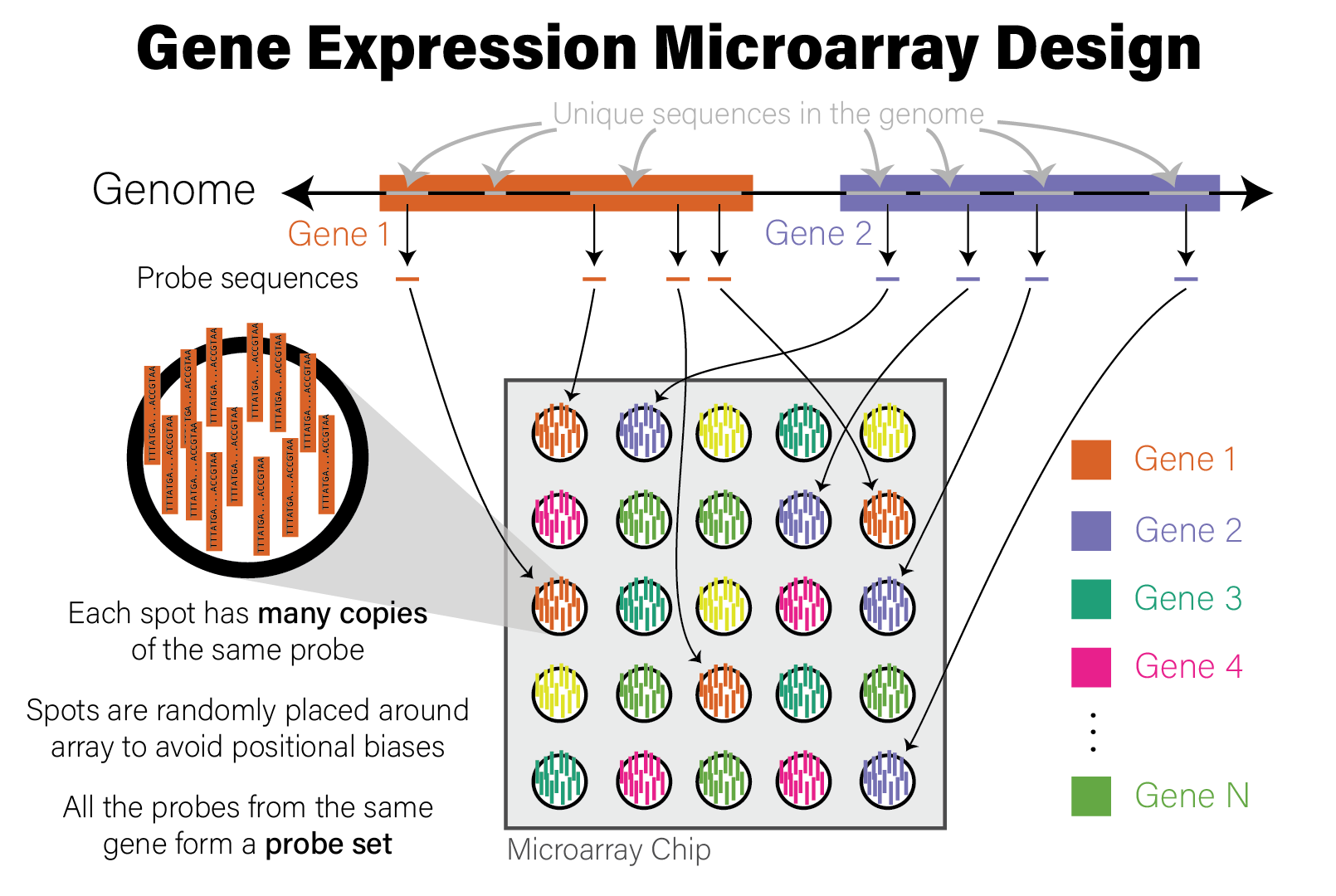

Microarrays are devices that measure the relative abundance of thousands of distinct DNA sequences simultaneously. Short (~25 nucleotide) single-stranded DNA molecules called probes are deposited on a small glass slide in a grid of spots, where each spot contains many copies of a probe with identical sequence. The probe sequences are selected a priori based on the purpose of the array. For example, gene expression microarrays have probes that correspond to the coding regions of the genome for a species of interest, while genotyping microarrays use sequences with known variants found in a population of genomes, most often the human population. Microarrays of the same type (e.g. human gene expression) all have the same set of probes. The choice of DNA sequence probes therefore determines what the microarray measures and how to interpret the data. The design of a microarray is illustrated in the following figure.

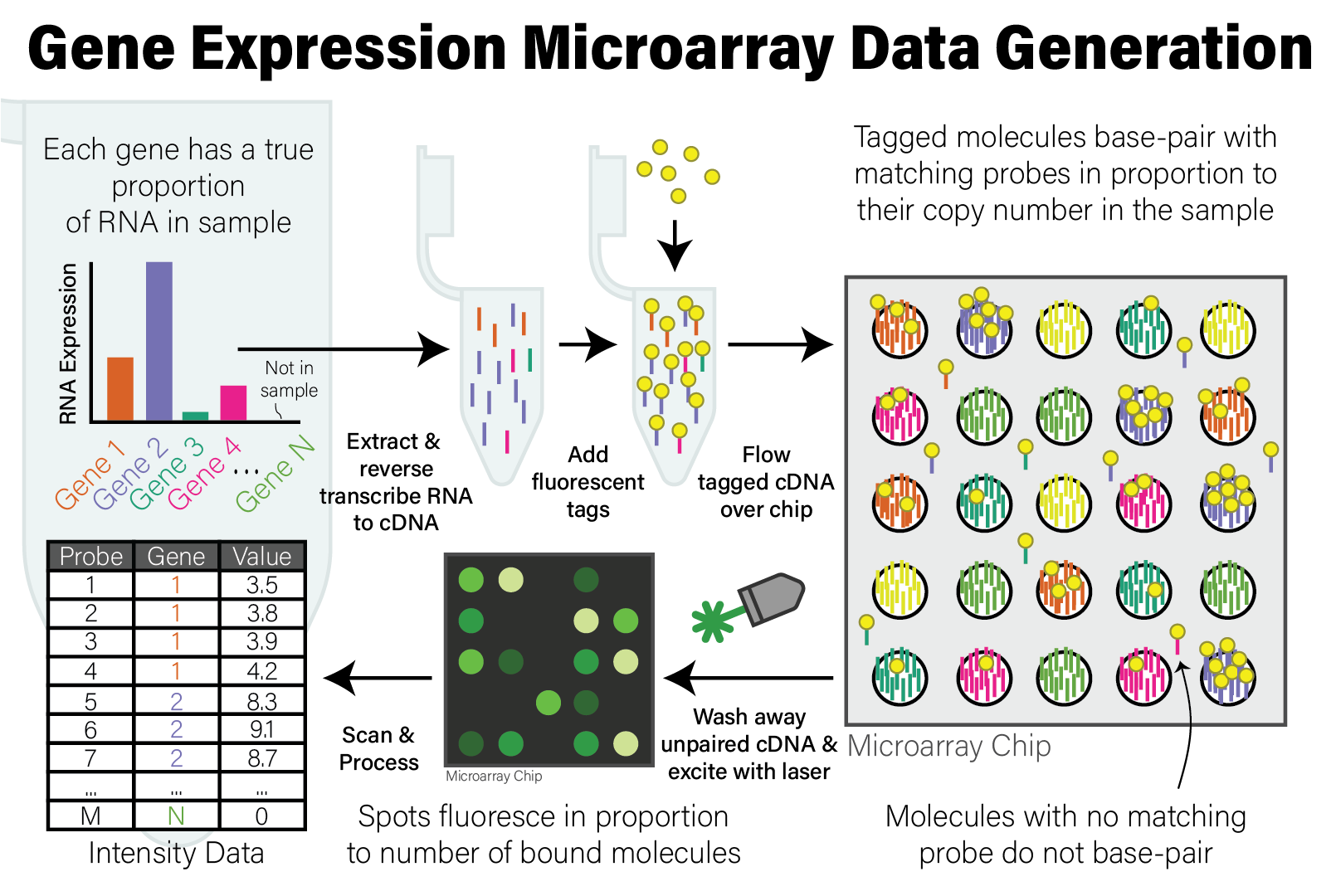

A microarray device generates data by applying a specially prepared DNA sample to it; the sample usually corresponds to a single biological specimen, e.g. an individual patient. The preparation method for the sample depends on what is being measured:

- When measuring DNA directly, e.g. genetic variants, the DNA itself is biochemically extracted

- When measuring gene expression via RNA abundance, RNA is first extracted and then reverse transcribed to complementary DNA (cDNA)

In either case, the molecules that will be applied to the microarray are DNA molecules. After extraction and preparation, the DNA molecules are then randomly cut up into shorter molecules (i.e. sheared) and each molecule has a molecular tag biochemically ligated to it that will emit fluorescence when excited by a specific wavelength of light. This tagged DNA sample is then washed over the microarray chip, where DNA molecules that share sequence complementarity with the probes on the array pair together. After this treatment, the microarray is washed to remove DNA molecules that did not have a match on the array, leaving only those molecules with a sequence match that remain bound to probes.

The microarray chip is then loaded into a scanning device, where a laser with a specific wavelength of light is then shone onto the array, causing the spots with tagged DNA molecules associated with probes to fluoresce, and other spots remain dark. A high resolution image is taken of the fluorescent array, and the image is analyzed to map the intensity of the light on each spot to a numeric value proportional to its intensity. The reason for this is that, since each spot has many individual probe molecules contained within it, the more copies of the corresponding DNA molecule were in the sample, the more light the spot emits. In this way, the relative abundance of all probes on the array are measured simultaneously. The process of generating microarray data from a sample is illustrated in the following figure.

After the microarray has been scanned, the relative copy number of the DNA in the sample matching the probes on the microarray are expressed as the intensity of fluorescence of each probe. The raw intensity data from the scan has been processed and analyzed by the scanner software to account for technical biases and artefacts of the scanning instrument and data generation process. The data from a single scan is processed and stored in a file in CEL format, a proprietary data format that stores the raw probe intensity data that can be loaded for downstream analysis.

9.5 High Throughput Sequencing

High throughput sequencing (HTS) technologies measure and digitize the nucleotide sequences of thousands to billions of DNA molecules simultaneously. In contrast to microarrays, which cannot identify new sequences due to their a priori probe based design, HTS instruments can in principle determine the sequence of any DNA molecule in a sample. For this reason, HTS datasets are sometimes called unbiased assays, because the sequences they identify are not predetermined.

The most popular HTS technology at the time of writing is provided by the Illumina biotechnology company. This technology was first developed and commercialized by the company Solexa during the late 1990s and was instrumental in the completion of the human genome. The cost of generating data with this technology has reduced by several orders of magnitude since then, resulting in an exponential growth of biological data and fundamentally changed our understanding of biology and health. Because of this, the analysis of these data, and not the time and cost of generation, has become the primary bottleneck in biological and biomedical research.

The Illumina HTS technology uses a biotechnological technique called sequencing by synthesis. Briefly, this technique entails biochemically ligating millions to billions of short (~300 nucleotide long) DNA molecules to a glass slide, denatured to become single stranded, and then used to synthesize the complementary strand by incorporating fluorescently tagged nucleotides. The tagged nucleotides that are incorporated on each nucleotide addition are excited with a laser and a high resolution photograph is taken of the flow cell. This process is repeated many times until the DNA molecules on the flow cell have been completely synthesized, and the collective images are computationally analyzed to build up the complete sequence of each molecule.

Other HTS methods have been developed that use different strategies, some of which do not utilize the sequencing by synthesis method. These alternative technologies differ in the length, number, confidence and per base cost of the DNA molecules they sequence. At the time of writing, the two most common technologies include:

- Pacific Biosciences (PacBio) Long Read Sequencing - this technology uses an engineered polymerase that performs run-on amplification of circularized DNA molecules and records a signature of each different base as it is incorporated into the amplification product. PacBio sequencing can generate reads that are thousands of nucleotides in length.

- Oxford Nanopore Sequencing (ONS) - this technology uses engineered proteins to form pores in an electrically conductive membrane, through which single stranded DNA molecules pass. As the individual nucleotides of a DNA molecule pass through the pore, the electrical current induced by the different nucleotide molecular structures is recorded and analyzed with signal processing algorithms to recover the original sequence of the molecule. No synthesis occurs in ONS technology.

Due to its widespread use, only Illumina sequencing data analysis is covered in this book, though additions in the future may be warranted.

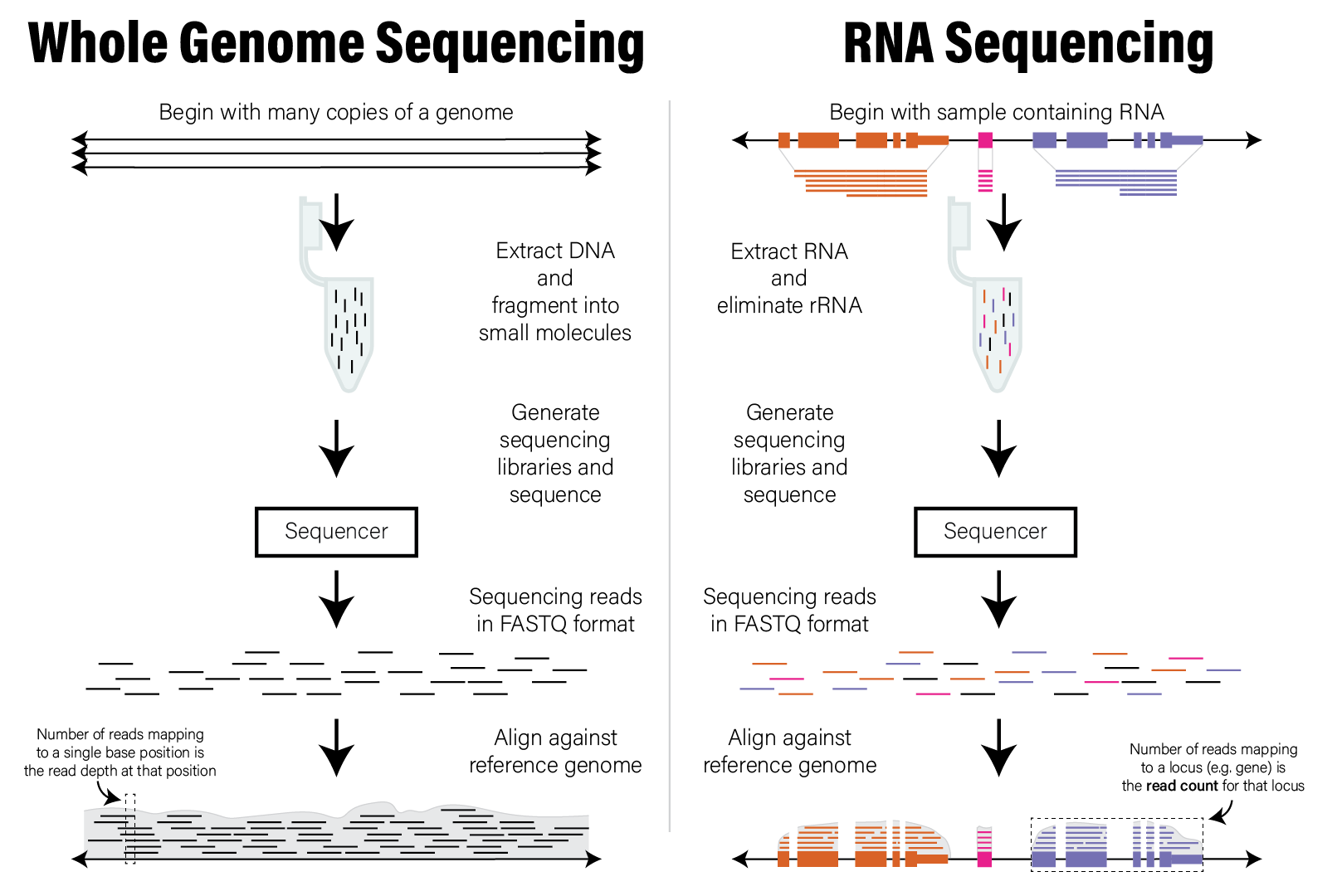

Any DNA molecules may be subjected to sequencing via this method; the meaning of the resulting datasets and the goal of subsequent analysis are therefore dependent upon the material being studied. Below are some of the most common types of HTS experiments:

- Whole genome sequencing (WGS) - the input to sequencing is randomly sheared genomic DNA molecules, from single organisms or a collection of organisms, with the goal of reconstructing or further refining the genome sequence of the constituent organisms

- RNA Sequencing (RNASeq) - the input is complementary DNA (cDNA) that has been reverse transcribed from RNA extracted from a sample, with the goal of detecting which RNA transcripts (and therefore genes) are expressed in that sample

- Whole genome bisulfite sequencing (WGBS) - the input to sequencing is randomly sheared genomic DNA that has undergone bisulfite treatment, which converts methylated cytosines to thymines, thereby enabling detection of epigenetic methylation marks in the DNA

- Chromatin immunoprecipitation followed by sequencing (ChIPSeq) - the input is genomic DNA that was bound by specific DNA-binding proteins (e.g. transcription factors), that allows identification of locations in the genome where those proteins were bound

A complete coverage of the many different types of sequencing assays is beyond the scope of this book, but analysis techniques used for of some of these different types of experiments are covered later in this chapter.

9.5.1 Raw HTS Data

The raw data produced by the sequencing instrument, or sequencer, are the digitized DNA sequences for each of the billions of molecules that were captured by the flow cell. The digitized nucleotide sequence for each molecule is called a read, and therefore every run of a sequencing instrument produces millions to billions of reads. The data is stored in a standardized format called the FASTQ file format. Data for a single read in FASTQ format is shown below:

@SEQ_ID

GATTTGGGGTTCAAAGCAGTATCGATCAAATAGTAAATCCATTTGTTCAACTCACAGTTT

+

!''*((((***+))%%%++)(%%%%).1***-+*''))**55CCF>>>>>>CCCCCCC65Each read has four lines in the FASTQ file. The first beginning with @ is the

sequencing header or name, which provides a unique identifier for the read in

the file. The second line is the nucleotide sequence as detected by the

sequencer. The third line is a secondary header line that starts with + and

may or may not include further information. The last line contains the phred

quality scores for the base

calls in the corresponding position of the read. Briefly, each character in the

phred quality score line has a corresponding integer is proportional to the

confidence that the base in the corresponding position of the read was called

correctly. This information is used to assess the quality of the data. See this

more in-depth explanation of phred

score for more information.

9.5.2 Preliminary HTS Data Analysis

Since all HTS experiments generate the same type of data (i.e. reads), all data analyses begin with processing of the sequences via bioinformatic algorithms; the choice of algorithm is determined by the experiment type and the biological question being asked. A full treatment of the variety of bioinformatic approaches to processing these data is beyond the scope of this book. We will focus on one analysis approach, alignment, that is commonly employed to produce derived data that is then further processed using R.

It is very common for HTS data, also called short read sequencing data, to be generated from organisms that have already had their genome sequenced, e.g. human or mouse. The reads in a dataset can therefore be compared with the appropriate genome sequence to aid in interpretation. This comparison amounts to searching in the organism’s genome, or the so-called reference genome, for the location or locations from which the molecule captured by each read originated. This search process is called alignment, where each short read sequence is aligned against the usually much larger reference genome to identify locations with identical or nearly identical sequence. Said differently, the alignment process attempts to assign each read to one or more locations in a reference genome, where only reads that have a match in the target genome will be assigned.

The alignment process is computationally intensive and requires specialized software to perform efficiently. While it may be technically capable of performing alignment, R is not optimized to process character data in the volume typical of these datasets. Therefore, it is not common to work with short read data (i.e. FASTQ files) directly in R, and other processing steps are required to transform the sequencing data into other forms that are then amenable to analysis in R.

The end result of aligning all the reads in a short read dataset is assignments of reads to positions across an entire reference genome. The distribution of those read alignment locations across the genome forms the basis of the data used to interpret the dataset. Specifically, each locus, or location spanning one or more bases, has zero or more reads aligned to it. The number of reads aligned to a locus, or the read count is commonly interpreted as being proportional to the number of those molecules found in the original sample. Therefore, the analysis and interpretation of HTS data commonly involves the analysis of count data, which we discuss in detail in the next section. The following figure illustrates the concepts described in this section.

9.5.3 Count Data

As mentioned above, the number of reads, or read counts that align to each locus of a genome form a distribution we can use to interpret the data. Count data have two important properties that require special attention:

- Count data are integers - the read counts assigned to a particular locus are whole numbers

- Counts are non-negative - counts can either be zero or positive; they cannot be negative

These two properties have the important consequence that count data are not normally distributed. Unlike with microarray data, common statistical techniques like linear regression are not directly applicable to count data. We must therefore account for this property of the data prior to statistical analysis in one of two ways:

- Use statistical methods that model counts data - generalized linear models that model data using Poisson and Negative Binomial distributions

- Transform the data to have be normally distributed - certain statistical transformations, such as regularized log or rank transformations can make counts data more closely follow a normal distribution

We will discuss these strategies in greater detail in the RNASeq Gene Expression Data section.

9.6 Gene Identifiers

9.6.1 Gene Identifier Systems

Since the first genetic sequence for a gene was determined in 1965 - an alanine tRNA in yeast(Holley et al. 1965) - scientists have been trying to give them names. In 1979, formal guidelines for the nomenclature used for human genes was proposed(Shows et al. 1979) along with the founding of the Human Gene Nomenclature Committee (Bruford et al. 2020), so that researchers would have a common vocabulary to refer to genes in a consistent way. In 1989, this committee was placed under the auspices of the Human Genome Organisation (HUGO), becoming the HUGO Gene Nomenclature Committee (HGNC). The HGNC has been the official body providing guidelines and gene naming authority since, and as such HGNC gene names are often called gene symbols.

As per Bruford et al. (2020), the official human gene symbol guidelines are as follows:

- Each gene is assigned a unique symbol, HGNC ID and descriptive name.

- Symbols contain only uppercase Latin letters and Arabic numerals.

- Symbols should not be the same as commonly used abbreviations

- Nomenclature should not contain reference to any species or “G” for gene.

- Nomenclature should not be offensive or pejorative.

Gene symbols are the most human-readable system for naming genes. The gene symbols BRCA1, APOE, and ACE2 may be familiar to you as they are involved in common diseases, namely breast cancer, Alzheimer’s Disease risk, and SARS-COV-2, respectively. Typically, the gene symbol is an acronym that roughly represents a label of what the gene is or does (or was originally found to be or do, as many genes are subsequently discovered to be involved in entirely separate processes as well), e.g. APOE represents the gene apolipoprotein E. This convention is convenient for humans when reading and identifying genes. However, standardized though the symbol conventions may be, they are not always easy for computers to work with, and ambiguities can cause mapping problems.

Other gene identifier systems developed along with other gene information databases. In 2000, the Ensembl genome browser was launched by the Wellcome Trust, a charitable foundation based in the United Kingdom, with the goal of providing automated annotation of the human genome. The Ensembl Project, which supports the genome browser, recognized even before the publication of the human genome that manual annotation and curation of genes is a slow and labor intensive process that would not provide researchers around the world timely access to information. The project therefore created a set of automated tools and pipelines to collect, process, and publish rapid and consistent annotation of genes in the human genome. Since its initial release, Ensembl now supports over 50,000 different genomes.

Ensembl assigns a automatic, systematic ID called the Ensembl Gene ID to every

gene in its database. Human Ensembl Gene IDs take the form ENSG plus an 11

digit number, optionally followed by a period delimited version number. For

example, at the time of writing the BRCA1 gene has an Ensembl Gene ID of

ENSG00000012048.23. The stable ID portion (i.e. ENSGNNNNNNNNNNN) will remain

associated with the gene forever (unless the gene annotation changes

“dramatically” in which case it is

retired). The .23 is the version

number of the gene annotation, meaning this gene has been updated 22 times (plus

its initial version) since addition to the database. The additional version

information is very important for reproducibility of biological analysis, since

conclusions drawn by results of these analyses are usually based on the most

current information about a gene which are continually updated over time.

Ensembl maintains annotations for many different organisms, and the gene identifiers for each genome contain codes that indicate which organism the gene is for. Here are the codes for orthologs of the body plan developmental gene HOXA1 in several different species:

| Gene ID Prefix | Organism | Symbol | Example |

|---|---|---|---|

ENSG |

Homo sapiens | HOXA1 | ENSG00000105991 |

ENSMUSG |

Mus musculus (mouse) | Hoxa1 | ENSMUSG00000029844 |

ENSDARG |

Danio rario (zebrafish) | hoxa1a | ENSDARG00000104307 |

ENSFCAG |

Felis catus (cat) | HOXA1 | ENSFCAG00000007937 |

ENSMMUG |

Macaca mulata (macaque) | HOXA1 | ENSMMUG00000012807 |

Ensembl Gene IDs and gene symbols are the most commonly used gene identifiers. The GENCODE project which provides consistent and stable genome annotation releases for human and mouse genomes, uses these two types of identifiers with its official annotations.

Ensembl provides stable identifiers for transcripts as well as genes. Transcript

identifiers correspond to distinct patterns of exons/introns that have been

identified for a gene, and each gene has one or more distinct transcripts.

Ensembl Transcript IDs have the form ENSTNNNNNNNNNNN.NN similar to Gene IDs.

Ensembl is not the only gene identifier system besides gene symbol. Several

other databases have devised and maintain their own identifiers, most notably

[Entrez Gene IDs](UCSC Gene IDs used by the NCBI Gene

database which assigns a unique integer to

each gene in each organism, and the Online Mendelian Inheritance in Man

(OMIM) database, which has identifiers that look like

OMIM:NNNNN, where each OMIM ID refers to a unique gene or human phenotype.

However, the primary identifiers used in modern human biological research remain

Ensembl IDs and official HGNC gene symbols.

9.6.2 Mapping Between Identifier Systems

A very common operation in biological analysis is to map between gene identifier systems, e.g. you are given Ensembl Gene ID and want to map to the more human-recognizable gene symbols. Ensembl provides a service called BioMart that allows you to download annotation information for genes, transcripts, and other information maintained in their databases. It also provides limited access to external sources of information on their genes, including cross references to HGNC gene symbols and some other gene identifier systems. The Ensembl website has helpful documentation on how to use BioMart to download annotation data using the web interface.

In addition to downloading annotations as a CSV file from the web interface, Ensembl also provides the biomaRt Bioconductor package to allow programmatic access to the same information directly from R. There is much more information in the Ensembl databases than gene annotation data that can be accessed via BioMart, but we will provide a brief example of how to extract gene information here:

# this assumes biomaRt is already installed through bioconductor

library(biomaRt)

# the human biomaRt database is named "hsapiens_gene_ensembl"

ensembl <- useEnsembl(biomart="ensembl", dataset="hsapiens_gene_ensembl")

# listAttributes() returns a data frame, turn into a tibble to help with formatting

as_tibble(listAttributes(ensembl))

# A tibble: 3,143 x 3

name description page

<chr> <chr> <chr>

1 ensembl_gene_id Gene stable ID feature_page

2 ensembl_gene_id_version Gene stable ID version feature_page

3 ensembl_transcript_id Transcript stable ID feature_page

4 ensembl_transcript_id_version Transcript stable ID version feature_page

5 ensembl_peptide_id Protein stable ID feature_page

6 ensembl_peptide_id_version Protein stable ID version feature_page

7 ensembl_exon_id Exon stable ID feature_page

8 description Gene description feature_page

9 chromosome_name Chromosome/scaffold name feature_page

10 start_position Gene start (bp) feature_page

# ... with 3,133 more rowsThe name column provides the programmatic name associated with the attribute

that can be used to retrieve that annotation information using the getBM()

function:

# create a tibble with ensembl gene ID, HGNC gene symbol, and gene description

gene_map <- as_tibble(

getBM(

attributes=c("ensembl_gene_id", "hgnc_symbol", "description"),

mart=ensembl

)

)

gene_map

# A tibble: 68,012 x 3

ensembl_gene_id hgnc_symbol description

<chr> <chr> <chr>

1 ENSG00000210049 MT-TF mitochondrially encoded tRNA-Phe (UUU/C) [Source:HGNC Symbol;Acc:HGNC:748~

2 ENSG00000211459 MT-RNR1 mitochondrially encoded 12S rRNA [Source:HGNC Symbol;Acc:HGNC:7470]

3 ENSG00000210077 MT-TV mitochondrially encoded tRNA-Val (GUN) [Source:HGNC Symbol;Acc:HGNC:7500]

4 ENSG00000210082 MT-RNR2 mitochondrially encoded 16S rRNA [Source:HGNC Symbol;Acc:HGNC:7471]

5 ENSG00000209082 MT-TL1 mitochondrially encoded tRNA-Leu (UUA/G) 1 [Source:HGNC Symbol;Acc:HGNC:7~

6 ENSG00000198888 MT-ND1 mitochondrially encoded NADH:ubiquinone oxidoreductase core subunit 1 [So~

7 ENSG00000210100 MT-TI mitochondrially encoded tRNA-Ile (AUU/C) [Source:HGNC Symbol;Acc:HGNC:748~

8 ENSG00000210107 MT-TQ mitochondrially encoded tRNA-Gln (CAA/G) [Source:HGNC Symbol;Acc:HGNC:749~

9 ENSG00000210112 MT-TM mitochondrially encoded tRNA-Met (AUA/G) [Source:HGNC Symbol;Acc:HGNC:749~

10 ENSG00000198763 MT-ND2 mitochondrially encoded NADH:ubiquinone oxidoreductase core subunit 2 [So~

# ... with 68,002 more rowsWith this Ensembl Gene ID to HGNC symbol mapping in hand, you can combine the tibble above with other tibbles containing gene information by joining them together.

BioMart/biomaRt is not the only ways to map different gene identifiers. The Bioconductor package AnnotateDbi also provides this functionality in a flexible format independent of the Ensembl databases. This package includes not only gene identifier mapping and information, but also identifiers from technology platforms, e.g. probe set IDs from microarrays, that can help when working with these types of data. The package also allows comprehensive and flexible homolog mapping. As with all Bioconductor packages, the AnnotationDbi documentation is well written and comprehensive, though knowledge of relational databases is helpful in understanding how the packages work.

9.6.3 Mapping Homologs

Sometimes it is necessary to link datasets from different organisms together by

orthology. For example, in an experiment performed in mice, we might be

interested in comparing gene expression patterns observed in the mouse samples

to a publicly available human dataset. In these contexts, we must link gene

identifiers from one organism to their corresponding homologs in the other.

BioMart enables us to extract these linked identifiers by explicitly connecting

different biomaRt

databases

with the getLDS() function:

human_db <- useEnsembl("ensembl", dataset = "hsapiens_gene_ensembl")

mouse_db <- useEnsembl("ensembl", dataset = "mmusculus_gene_ensembl")

orth_map <- as_tibble(

getLDS(attributes = c("ensembl_gene_id", "hgnc_symbol"),

mart = human_db,

attributesL = c("ensembl_gene_id", "mgi_symbol"),

martL = mouse_db

)

)

orth_map

# A tibble: 26,390 x 4

Gene.stable.ID HGNC.symbol Gene.stable.ID.1 MGI.symbol

<chr> <chr> <chr> <chr>

1 ENSG00000198695 MT-ND6 ENSMUSG00000064368 "mt-Nd6"

2 ENSG00000212907 MT-ND4L ENSMUSG00000065947 "mt-Nd4l"

3 ENSG00000279169 PRAMEF13 ENSMUSG00000094303 ""

4 ENSG00000279169 PRAMEF13 ENSMUSG00000094722 ""

5 ENSG00000279169 PRAMEF13 ENSMUSG00000095666 ""

6 ENSG00000279169 PRAMEF13 ENSMUSG00000094741 ""

7 ENSG00000279169 PRAMEF13 ENSMUSG00000094836 ""

8 ENSG00000279169 PRAMEF13 ENSMUSG00000074720 ""

9 ENSG00000279169 PRAMEF13 ENSMUSG00000096236 ""

10 ENSG00000198763 MT-ND2 ENSMUSG00000064345 "mt-Nd2"

# ... with 26,380 more rowsThe mgi_symbol field refers to the gene symbol assigned in the Mouse Genome

Informatics database

maintained by The Jackson Laboratory.

9.7 Gene Expression

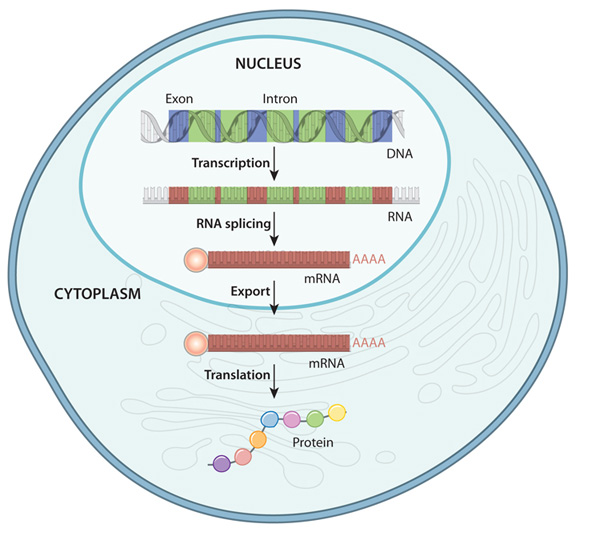

Gene expression is the process by which information from a gene is used in the synthesis of functional gene products that affect a phenotype. While gene expression studies are often focused on protein coding genes, there are many other functional molecules, e.g. transfer RNAs, lncRNAs, etc, that are produced by this process. The gene expression process has many steps and intermediate products, as depicted in the following figure:

The specific parts of the genome that code for genes are copied, or transcribed into RNA molecules called transcripts. In lower organisms like bacteria, these RNA molecules are passed on directly to ribosomes, which translate them into proteins. In most higher organisms like eukaryotes, these initial transcripts, called pre-messenger RNA (pre-mRNA) transcripts are further processed such that certain parts of the transcript, called introns, are spliced out and the flanking sequences, called exons, are concatenated together. After this splicing process is complete, the pre-mRNA transcripts become mature messenger RNA (mRNA) transcripts which go on to be exported from the nucleus and loaded into ribosomes in the cytoplasm to undergo translation into proteins.

In gene expression studies, the relative abundance, or number of copies, of RNA or mRNA transcripts in a sample is measured. The measurements are non-negative numbers that are proportional to the relative abundance of a transcript with respect to some reference, either another gene, or in the case of high throughput assays like microarrays or high throughput sequencing, relative to measurements of all distinct transcripts examined in the experiment. Conceptually, the larger the magnitude of a transcript abundance measurement, the more copies of that transcript were in the original sample.

There are many ways to measure the abundance of RNA transcripts; the following are some of the common methods at the time of writing:

- Light absorbance - RNA absorbs ultraviolet light with wavelength 260 nm, which can be used to determine RNA concentration in a sample using specialized equipment

- Northern blot - measures relative abundance of an RNA with a specific sequence

- quantitative polymerase chain reaction (qPCR) - measures relative abundance of an RNA with a specific sequence using PCR amplification

- Oligonucleotide and microarrays - measures relative abundance of thousands of genes simultaneously using known DNA probe sequences and fluorescently tagged RNA molecules

- High throughput RNA sequencing (RNASeq) - measures thousands to millions of RNA fragments simultaneously in proportion to their relative abundance

While any of the measurement methods above may be analyzed in R, the high throughput methods (i.e. microarray, high throughput sequencing) are the primary concern of this chapter. These methods generate measurements for thousands of transcripts or genes simultaneously, requiring the power and flexibility of programmatic analysis to process in a practical amount of time. The remainder of this chapter is devoted to understanding the specific technologies, data types, and analytical strategies involved in working with these data.

Gene expression measurements are almost always inherently relative, either due to limitations of the measurement methods (e.g. microarrays, described below) or because measuring all molecules in a sample will almost always be prohibitively expensive, have diminishing returns, and it is very difficult if not impossible to determine if all the molecules have been measured. This means we cannot in general associate the numbers associated with the measurements with an absolute molecular copy number. An important implication of the inherent relativity of these measurements is: absence of evidence is not evidence of absence. In other words, if a transcript has an abundance measurement of zero, this does not necessarily imply that the gene is not expressed. It may be that the gene is indeed expressed, but the copy number is sufficiently small that it was not detected by the assay.

9.7.1 Gene Expression Data in Bioconductor

The SummarizedExperiment container is the standard way to load and work with gene expression data in Bioconductor. This container requires the following information:

-

assays- one or more measurement assays (e.g. gene expression) in the form of a feature by sample matrix -

colData- metadata associated with the samples (i.e. columns) of the assays -

rowData- metadata associated with the features (i.e. rows) of the assays -

exptData- additional metadata about the experiment itself, like protocol, project name, etc

The figure below illustrates how the SummarizedExperiment container is structured and how the different data elements are accessed:

Many Bioconductor packages for specific types of data, e.g. limma create these SummarizedExperiment objects for you, but you may also create your own by assembling each of these data into data frames manually:

# microarray expression dataset intensities

intensities <- readr::read_delim("example_intensity_data.csv",delim=" ")

# the first column of intensities tibble is the probesetId, extract to pass as rowData

rowData <- intensities["probeset_id"]

# remove probeset IDs from tibble and turn into a R dataframe so that we can assign rownames

# since tibbles don't support row names

intensities <- as.data.frame(

select(intensities, -probeset_id)

)

rownames(intensities) <- rowData$probeset_id

# these column data are made up, but you would have a sample metadata file to use

colData <- tibble(

sample_name=colnames(intensities),

condition=sample(c("case","control"),ncol(intensities),replace=TRUE)

)

se <- SummarizedExperiment(

assays=list(intensities=intensities),

colData=colData,

rowData=rowData

)

se## class: SummarizedExperiment

## dim: 54675 35

## metadata(0):

## assays(1): intensities

## rownames(54675): 1007_s_at 1053_at ... AFFX-TrpnX-5_at AFFX-TrpnX-M_at

## rowData names(1): probeset_id

## colnames(35): GSM972389 GSM972390 ... GSM972512 GSM972521

## colData names(2): sample_name conditionDetailed documentation of how to create and use the SummarizedExperiment is available in the SummarizedExperiment vignette.

SummarizedExperiment is the successor to the older ExpressionSet container. Both are still used by Bioconductor packages, but SummarizedExperiment is more modern and flexible, so it is suggested for use whenever possible.

9.7.2 Differential Expression Analysis

Differential expression analysis seeks to identify to what extent gene expression is associated with one or more variables of interest. For example, we might be interested in genes that have higher expression in subjects with a disease, or which genes change in response to a treatment.

Typically, gene expression analysis requires two types of data to run: an

expression matrix and a design matrix. The expression matrix will generally

have features (e.g. genes) as rows and samples as columns. The design matrix is

a numeric matrix that contains the variables we wish to model and any covariates

or confounders we wish to adjust for. The variables in the design matrix then

must be encoded in a way that statistical procedures can understand. The full

details of how design matrices are constructed is beyond the scope of this book.

Fortunately, R makes it very easy to construct these matrices from a tibble with

the model.matrix()

function.

Consider for the following imaginary sample information tibble that has 10 AD

and 10 Control subjects with different clinical and protein histology

measurements assayed on their brain tissue:

ad_metadata## # A tibble: 20 × 8

## ID age_at_death condition tau abeta iba1 gfap braak_stage

## <chr> <dbl> <fct> <dbl> <dbl> <dbl> <dbl> <fct>

## 1 A1 73 AD 96548 176324 157501 78139 4

## 2 A2 82 AD 95251 0 147637 79348 4

## 3 A3 82 AD 40287 45365 17655 127131 2

## 4 A4 81 AD 82833 181513 225558 122615 4

## 5 A5 84 AD 73807 69332 47247 46968 3

## 6 A6 81 AD 69108 139420 57814 24885 3

## 7 A7 91 AD 138135 209045 101545 32704 6

## 8 A8 88 AD 63485 104154 80123 31620 3

## 9 A9 74 AD 59485 148125 27375 50549 3

## 10 A10 69 AD 48357 27260 47024 78507 2

## 11 C1 80 Control 62684 93739 131595 124075 3

## 12 C2 77 Control 63598 69838 7189 35597 3

## 13 C3 72 Control 52951 19444 54673 17221 2

## 14 C4 75 Control 12415 7451 16481 38510 0

## 15 C5 77 Control 62805 0 40419 108479 3

## 16 C6 77 Control 1615 3921 2439 35430 0

## 17 C7 73 Control 62233 77113 81887 96024 3

## 18 C8 77 Control 59123 94715 72350 76833 3

## 19 C9 80 Control 21705 3046 26768 113093 1

## 20 C10 73 Control 15781 16592 10271 100858 1We might be interested in identifying genes that are increased or decreased in people with AD compared with Controls. We can create a model matrix for this as follows:

model.matrix(~ condition, data=ad_metadata)## (Intercept) conditionAD

## 1 1 1

## 2 1 1

## 3 1 1

## 4 1 1

## 5 1 1

## 6 1 1

## 7 1 1

## 8 1 1

## 9 1 1

## 10 1 1

## 11 1 0

## 12 1 0

## 13 1 0

## 14 1 0

## 15 1 0

## 16 1 0

## 17 1 0

## 18 1 0

## 19 1 0

## 20 1 0

## attr(,"assign")

## [1] 0 1

## attr(,"contrasts")

## attr(,"contrasts")$condition

## [1] "contr.treatment"The ~ condition argument is an an R

forumla,

which is a concise description of how variable should be included in the model.

The general format of a formula is as follows:

# portions in [] are optional

[<outcome variable>] ~ <predictor variable 1> [+ <predictor variable 2>]...

# examples

# model Gene 3 expression as a function of disease status

`Gene 3` ~ condition

# model the amount of tau pathology as a function of abeta pathology,

# adjusting for age at death

tau ~ age_at_death + abeta

# create a model without an outcome variable that can be used to create the

# model matrix to test for differences with disease status adjusting for age at death

~ age_at_death + conditionFor most models, the design matrix will have an intercept term of all ones and

additional colums for the other variables in the model. We might wish to adjust

out the effect of age by including age_at_death as a covariate in the model:

model.matrix(~ age_at_death + condition, data=ad_metadata)## (Intercept) age_at_death conditionAD

## 1 1 73 1

## 2 1 82 1

## 3 1 82 1

## 4 1 81 1

## 5 1 84 1

## 6 1 81 1

## 7 1 91 1

## 8 1 88 1

## 9 1 74 1

## 10 1 69 1

## 11 1 80 0

## 12 1 77 0

## 13 1 72 0

## 14 1 75 0

## 15 1 77 0

## 16 1 77 0

## 17 1 73 0

## 18 1 77 0

## 19 1 80 0

## 20 1 73 0

## attr(,"assign")

## [1] 0 1 2

## attr(,"contrasts")

## attr(,"contrasts")$condition

## [1] "contr.treatment"The model matrix now includes a column for age at death as well. This model model matrix is now suitable to pass to differential expression software packages to look for genes associated with disease status.

As mentioned above, modern gene expression assays measure thousands of genes simultaneously. This means each gene must be tested for differential expression individually. In general, each gene is tested using the same statistical model, so a differential expression analysis package will perform something equivalent to the following:

Gene 1 ~ age_at_death + condition

Gene 2 ~ age_at_death + condition

Gene 3 ~ age_at_death + condition

...

Gene N ~ age_at_death + conditionEach gene model will have its own statistics associated with it that we must process and interpret after the analysis is complete.

9.7.3 Microarray Gene Expression Data

On a high level, there are four steps involved in analyzing gene expression microarray data:

- Summarization of probes to probesets. Each gene is represented by multiple probes with different sequences. Summarization is a statistical procedure that computes a single value for each probeset from its corresponding probes.

- Normalization. This includes removing background signal from individual arrays as well as adjusting probeset intensities so that they are comparable across multiple sample arrays.

- Quality control. Compare all normalized samples within a sample set to identify, mitigate, or eliminate potential outlier samples.

- Analysis. Using the quality controlled expression data, Implement statistical analysis to answer research questions.

The full details of microarray analysis are beyond the scope of this book. However in the following sections we cover some of the basic entry points to performing these steps in R and Bioconductor.

The CEL data files from a set of microarrays can be loaded into R for analysis

using the affy Bioconductor

package.

This package provides all the functions necessary for loading the data and

performing key preprocessing operations. Typically, two or more samples were

processed in this way, resulting in a set of CEL files that should be processed

together. These CEL files will typically be all stored in the same directory,

and may be loaded using the affy::ReadAffy function:

# read all CEL files in a single directory

affy_batch <- affy::ReadAffy(celfile.path="directory/of/CELfiles")

# or individual files in different directories

cel_filenames <- c(

list.files( path="CELfile_dir1", full.names=TRUE, pattern=".CEL$" ),

list.files( path="CELfile_dir2", full.names=TRUE, pattern=".CEL$" )

)

affy_batch <- affy::ReadAffy(filenames=cel_filenames)The affy_batch variable is a AffyBatch container, which stores information

on the probe definitions based on the type of microarray, probe-level intensity

for each sample, and other information about the experiment contained within the

CEL files.

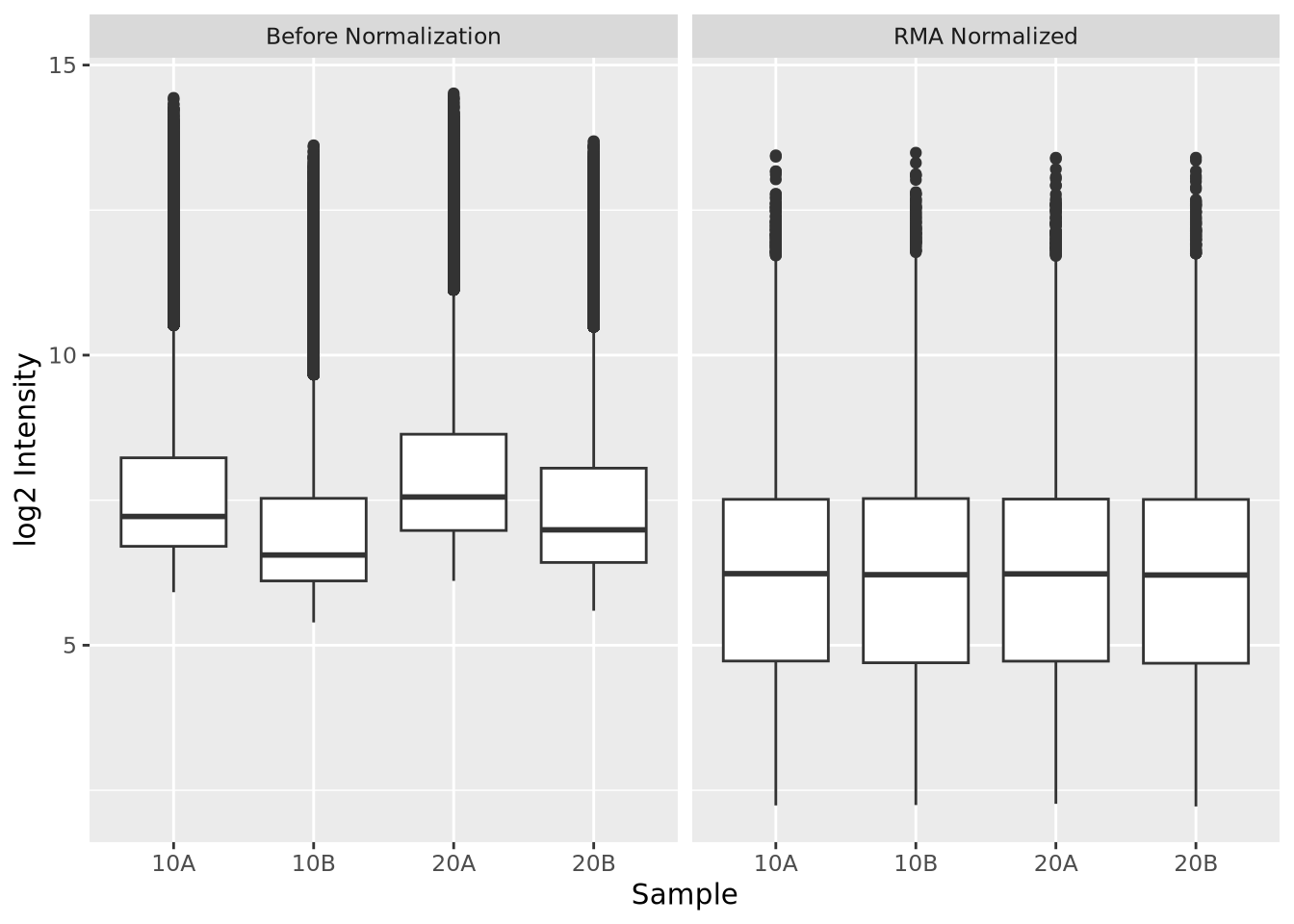

The affy package provides functions to perform probe summarization and

normalization. The most popular method to accomplish this at the time of writing

is called Robust Multi-array Average or RMA (Irizarry et al. 2003), which performs

summarization and normalization of multiple arrays simultaneously. Below, the

RMA algorithm is used to normalize an example dataset provided by the

affydata

Bioconductor package.

Certain Bioconductor packages provide example datasets for use with companion

analysis packages. For example, the affydata package provides the Dilution

dataset, which was generated using two concentrations of cDNA from human liver

tissue and a central nervous system cell line. To load a data package into R,

first run library(<data package>) and then data(<dataset name>).

library(affy)

library(affydata)

data(Dilution)

# normalize the Dilution microarray expression values

# note Dilution is an AffyBatch object

eset_rma <- affy::rma(Dilution,verbose=FALSE)## Package LibPath Item

## [1,] "affydata" "/opt/conda/lib/R/library" "Dilution"

## Title

## [1,] "AffyBatch instance Dilution"

data(Dilution)

# normalize the Dilution microarray expression values

# note Dilution is an AffyBatch object

eset_rma <- affy::rma(Dilution,verbose=FALSE)

# plot distribution of non-normalized probes

# note rma normalization takes the log2 of the expression values,

# so we must do so on the raw data to compare

raw_intensity <- as_tibble(exprs(Dilution)) %>%

mutate(probeset_id=rownames(exprs(Dilution))) %>%

pivot_longer(-probeset_id, names_to="Sample", values_to = "Intensity") %>%

mutate(

`log2 Intensity`=log2(Intensity),

Category="Before Normalization"

)

# plot distribution of normalized probes

rma_intensity <- as_tibble(exprs(eset_rma)) %>%

mutate(probesetid=featureNames(eset_rma)) %>%

pivot_longer(-probesetid, names_to="Sample", values_to = "log2 Intensity") %>%

mutate(Category="RMA Normalized")

dplyr::bind_rows(raw_intensity, rma_intensity) %>%

ggplot(aes(x=Sample, y=`log2 Intensity`)) +

geom_boxplot() +

facet_wrap(vars(Category))

Above, we first apply RMA to the Dilution microarray dataset using the

affy::rma() function. Then we plot the log 2 intensities of the probes in each

array, first using the raw intensities and then afysis seeking to

identify how associated gene expression is with one or more variables of

interest. For example,

9.7.4 Differential Expression: Microarrays (limma)

limma, which

is short for linear models of microarrays, is one of the top most

downloaded Bioconductor packages.

limma is utilized for analyzing microarray gene expression data, with a focus on

analyses using linear models to integrate all of the data from an experiment.

limma was developed for microarray analysis prior to the development of

sequencing based gene expression methods (i.e. RNASeq) but has since added

functionality to analyze other types of gene expression data.

limma excels at analyzing these types of data as it can support arbitrarily complex experimental designs while maintaining strong statistical power. An experiment with a large number of conditions or predictors can still be analyze even with small sample sizes. The method accomplishes this by using an empirical Bayes approach that borrows information across all the genes in the dataset to better control error in individual gene models. See Ritchie et al. (2015) for more details on how limma works.

limma requres an expression matrix like that stored in an ExpressionSet or SummarizedExperiment container and a design matrix:

# intensities is an example gene expression matrix that corresponds to our

# AD sample metadata of 10 AD and 10 Control individuals

ad_se <- SummarizedExperiment(

assays=list(intensities=intensities),

colData=ad_metadata,

rowData=rownames(intensities)

)

# define our design matrix for AD vs control, adjusting for age at death

ad_vs_control_model <- model.matrix(~ age_at_death + condition, data=ad_metadata)

# now run limma

# first fit all genes with the model

fit <- limma::lmFit(

assays(se)$intensities,

ad_vs_control_model

)

# now better control fit errors with the empirical Bayes method

fit <- limma::eBayes(fit)We have now conducted a differential expression analysis with limma. We can

extract out the results for our question of interest - which genes are

associated with AD - using the limma::topTable() function:

# the coefficient name conditionAD is the column name in the design matrix

# adjust="BH" means perform Benjamini-Hochberg multiple testing adjustment

# on the nominal p-values from each gene test

# topTable only returns the top 10 results sorted by ascending p-value by default

topTable(fit, coef="conditionAD", adjust="BH")## logFC AveExpr t P.Value adj.P.Val B

## 206354_at -2.7507429 5.669691 -5.349105 3.436185e-05 0.9992944 -2.387433

## 234942_s_at 0.9906708 8.397425 5.206286 4.726372e-05 0.9992944 -2.447456

## 243618_s_at -0.6879431 7.148455 -4.864695 1.020980e-04 0.9992944 -2.598600

## 223703_at 0.8693657 6.154392 4.745033 1.340200e-04 0.9992944 -2.654092

## 244391_at -0.5251423 5.532712 -4.691482 1.514215e-04 0.9992944 -2.679354

## 1554308_s_at -0.8622761 3.404188 -4.428740 2.763033e-04 0.9992944 -2.807091

## 1557179_s_at -0.2814909 2.767313 -4.379307 3.095237e-04 0.9992944 -2.831821

## 215238_s_at 0.4403151 4.970336 4.324162 3.513569e-04 0.9992944 -2.859664

## 237269_at -1.4862155 6.027691 -4.312331 3.610482e-04 0.9992944 -2.865672

## 212595_s_at 0.6459786 9.941364 4.303300 3.686273e-04 0.9992944 -2.870267From the table, none of the probesets in this experiment are significant at FDR < 0.05.

9.7.5 RNASeq

RNA sequencing (RNASeq) is a HTS technology that measures the abundance of RNA molecules in a sample. Briefly, RNA molecules are extracted from a sample using a biochemical protocol that isolates RNA from other molecules (i.e. DNA, proteins, etc) in the sample. Ribosomal RNA is removed from the sample (see Note box below), and the remaining RNA molecules are size selected to retain only short molecules (~15-300 nucleotides in length, depending on the protocol). The size selected molecules are then reverse transcribed into cDNA and converted to double stranded molecules. Sequencing libraries are prepared with these cDNA using proprietary biochemical protocols to make them suitable for sequencing on the sequencing instrument. After sequencing, FASTQ files containing millions of reads for one or more samples is produced. Except for the initial RNA extraction, all of these steps are typically performed by specialized instrumentation facilities called sequencing cores who provide the data in FASTQ format to the investigators.

80%-90% of the RNA mass in a cell is ribosomal RNA (rRNA), the RNA that makes up ribosomes and is responsible for maintaining protein translation processes in the cell. If total RNA was sequenced, the large majority of reads would correspond to rRNAs and would therefore be of little or no use. For this reason, rRNA molecules are removed from the RNA material that goes on to sequencing using either a poly-A tail enrichment or ribo-depletion strategy. These two methods maintain different populations of RNA and therefore sequencing datasets generated with different strategies require different interpretations. The more detail on this topic is beyond the scope of this book, but (Zhao et al. 2018) provide a good introduction and comparison of both approaches.

The reads produced in an RNASeq experiment represent RNA fragments from transcripts. The number of reads that correspond to specific transcripts is assumed to be proportional to the copy number of that transcript in the original sample. The alignment process described in the Preliminary HTS Data Analysis section produces alignments of reads against the genome or transcriptome, and the number of reads that align against each gene or transcript is counted using a reference annotation that defines the locations of every known gene in the genome. The resulting counts are therefore estimates of the copy number of the molecules for each gene or transcript in the original sample. Conceptually, the more reads that map to a gene, the higher the abundance of transcripts for that gene, and therefore we infer higher the expression of that gene.

Gene expression for different genes can vary by orders of magnitude within a single cell, where highly expressed genes may have billions of transcripts and others comparatively few. Therefore, the likelihood of obtaining a read for a given transcript is proportional to the relative abundance of that transcript compared with all other transcripts. Relatively rare transcripts have a low probability of being sampled by the sequencing procedure, and extremely lowly expressed genes may not be sampled at all. The library size (i.e. the total number of reads) in the sequencing dataset for a sample determines the range of gene expression the dataset is likely to detect, where datasets with few reads are unlikely to sample lowly expressed transcripts even though they are truly expressed. For this reason absence of evidence is not evidence of absence in RNASeq data. If a read is not observed for any given gene, we cannot say that the gene is not expressed; we can only say that the relative expression of that gene is below the detection threshold of the dataset we generated, given the number of sequenced reads.

For a single sample, the output of this read counting procedure is a vector of read counts for each gene in the annotation. Genes with no reads mapping to them will have a count of zero, and all others will have a read count of one or more; as mentioned in the Count Data section, read counts are typically non-zero integers. This procedure is typically repeated separately for a set of samples that make up the experiment, and the counts for each sample are concatenated together into a counts matrix. This count matrix is the primary result of interest that is passed on to downstream analysis, most commonly differential expression analysis.

9.7.6 RNASeq Gene Expression Data

The read counts for each gene or transcript in the genome are estimates of the relative abundance of molecules in the original sample. The number of genes counted depends on the organism being studied and the maturity of the gene annotation used. Complex multicellular organisms have on the order of thousands to tens of thousands of genes; for example, humans and mice have 20k-30k genes in their respective genomes. Each RNASeq experiment thus may yield tens of thousands of measurements for each sample.

We shall consider an example RNASeq dataset generated by (O’Meara et al. 2015) generated to study how mouse cardiac cells can regenerate up until one week of age, after which they lose the ability to do so. The dataset has eight RNASeq samples, two for each of four time points across the developmental window until adulthood. We will load in the expression data as a tibble:

counts <- read_tsv("verse_counts.tsv")

counts## # A tibble: 55,416 × 9

## gene P0_1 P0_2 P4_1 P4_2 P7_1 P7_2 Ad_1 Ad_2

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 ENSMUSG00000102693.2 0 0 0 0 0 0 0 0

## 2 ENSMUSG00000064842.3 0 0 0 0 0 0 0 0

## 3 ENSMUSG00000051951.6 19 24 17 17 17 12 7 5

## 4 ENSMUSG00000102851.2 0 0 0 0 1 0 0 0

## 5 ENSMUSG00000103377.2 1 0 0 1 0 1 1 0

## 6 ENSMUSG00000104017.2 0 3 0 0 0 1 0 0

## 7 ENSMUSG00000103025.2 0 0 0 0 0 0 0 0

## 8 ENSMUSG00000089699.2 0 0 0 0 0 0 0 0

## 9 ENSMUSG00000103201.2 0 0 0 0 0 0 0 1

## 10 ENSMUSG00000103147.2 0 0 0 0 0 0 0 0

## # ℹ 55,406 more rowsThe annotation counted reads for 55,416 annnotated Ensembl gene for the mouse genome, as described in the Gene Identifier Systems section.

There are typically three steps when analyzing a RNASeq count matrix:

- Filter genes that are unlikely to be differentially expressed.

- Normalize filtered counts to make samples comparable to one another.

- Differential expression analysis of filtered, normalized counts.

Each of these steps will be described in detail below.

9.7.7 Filtering Counts

Filtering genes that are not likely to be differentially expressed is an important step in differential expression analysis. The primary reason for this is to reduce the number of genes tested for differential expression, thereby reducing the multiple testing burden on the results. There are many approaches and rationales for picking filters, and the choice is generally subjective and highly dependent upon the specific dataset. In this section we discuss some of the rationale and considerations for different filtering strategies.

Genes that were not detected in any sample are the easiest to filter, and often eliminate many genes from consideration. Below we filter out all genes that have zero counts in all samples:

nonzero_genes <- rowSums(counts[-1])!=0

filtered_counts <- counts[nonzero_genes,]

filtered_counts## # A tibble: 32,613 × 9

## gene P0_1 P0_2 P4_1 P4_2 P7_1 P7_2 Ad_1 Ad_2

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 ENSMUSG00000051951.6 19 24 17 17 17 12 7 5

## 2 ENSMUSG00000102851.2 0 0 0 0 1 0 0 0

## 3 ENSMUSG00000103377.2 1 0 0 1 0 1 1 0

## 4 ENSMUSG00000104017.2 0 3 0 0 0 1 0 0

## 5 ENSMUSG00000103201.2 0 0 0 0 0 0 0 1

## 6 ENSMUSG00000103161.2 0 0 1 0 0 0 0 0

## 7 ENSMUSG00000102331.2 5 8 2 6 6 11 4 3

## 8 ENSMUSG00000102343.2 0 0 0 1 0 0 0 0

## 9 ENSMUSG00000025900.14 4 3 6 7 7 3 36 35

## 10 ENSMUSG00000102948.2 1 0 0 0 0 0 0 1

## # ℹ 32,603 more rowsOnly 32,613 genes remain after filtering out genes with all zeros, eliminating nearly 23,000 genes, or more than 40% of the genes in the annotation. Filtering genes with zeros in all samples as we have done above is somewhat liberal, as there may be many genes with reads in only one sample. Genes with only a single sample with a non-zero count also cannot be differentially expressed, so we can easily decide to eliminate those as well:

nonzero_genes <- rowSums(counts[-1])>=2

filtered_counts <- counts[nonzero_genes,]

filtered_counts## # A tibble: 28,979 × 9

## gene P0_1 P0_2 P4_1 P4_2 P7_1 P7_2 Ad_1 Ad_2

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 ENSMUSG00000051951.6 19 24 17 17 17 12 7 5

## 2 ENSMUSG00000103377.2 1 0 0 1 0 1 1 0

## 3 ENSMUSG00000104017.2 0 3 0 0 0 1 0 0

## 4 ENSMUSG00000102331.2 5 8 2 6 6 11 4 3

## 5 ENSMUSG00000025900.14 4 3 6 7 7 3 36 35

## 6 ENSMUSG00000102948.2 1 0 0 0 0 0 0 1

## 7 ENSMUSG00000025902.14 195 186 204 229 157 173 196 129

## 8 ENSMUSG00000104238.2 0 4 0 2 4 1 3 3

## 9 ENSMUSG00000102269.2 7 16 6 6 4 6 8 3

## 10 ENSMUSG00000118917.1 0 1 0 0 1 1 0 0

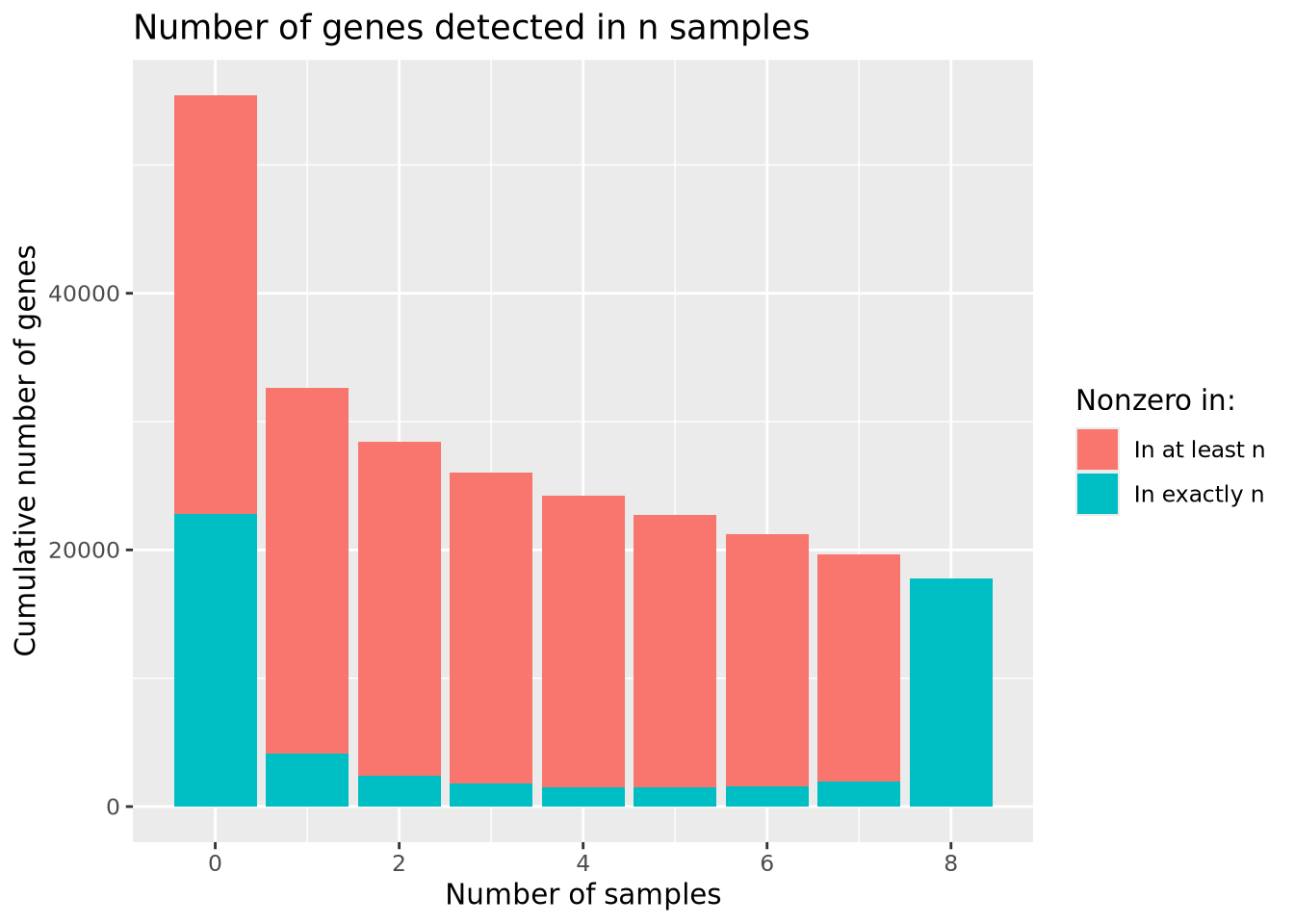

## # ℹ 28,969 more rowsWe have now reduced our number of genes to 28,979 by including only genes with at least two non-zero counts. We may now wonder how many genes we would include if we required at least \(n\) samples to have non-zero counts. Consider the following bar chart of the number of genes detected in at least \(n\) samples:

library(patchwork)

nonzero_counts <- mutate(counts,`Number of samples`=rowSums(counts[-1]!=0)) %>%

group_by(`Number of samples`) %>%

summarize(`Number of genes`=n() ) %>%

mutate(`Cumulative number of genes`=sum(`Number of genes`)-lag(cumsum(`Number of genes`),1,default=0))

sum_g <- ggplot(nonzero_counts) +

geom_bar(aes(x=`Number of samples`,y=`Cumulative number of genes`,fill="In at least n"),stat="identity") +

geom_bar(aes(x=`Number of samples`,y=`Number of genes`,fill="In exactly n"),stat="identity") +

labs(title="Number of genes detected in n samples") +

scale_fill_discrete(name="Nonzero in:")

sum_g

The largest number of genes is detected in none of the samples, followed by genes detected in all samples, with many fewer in between. Based on the plot, there is no obvious choice for how many nonzero samples are sufficient to use as a filter.

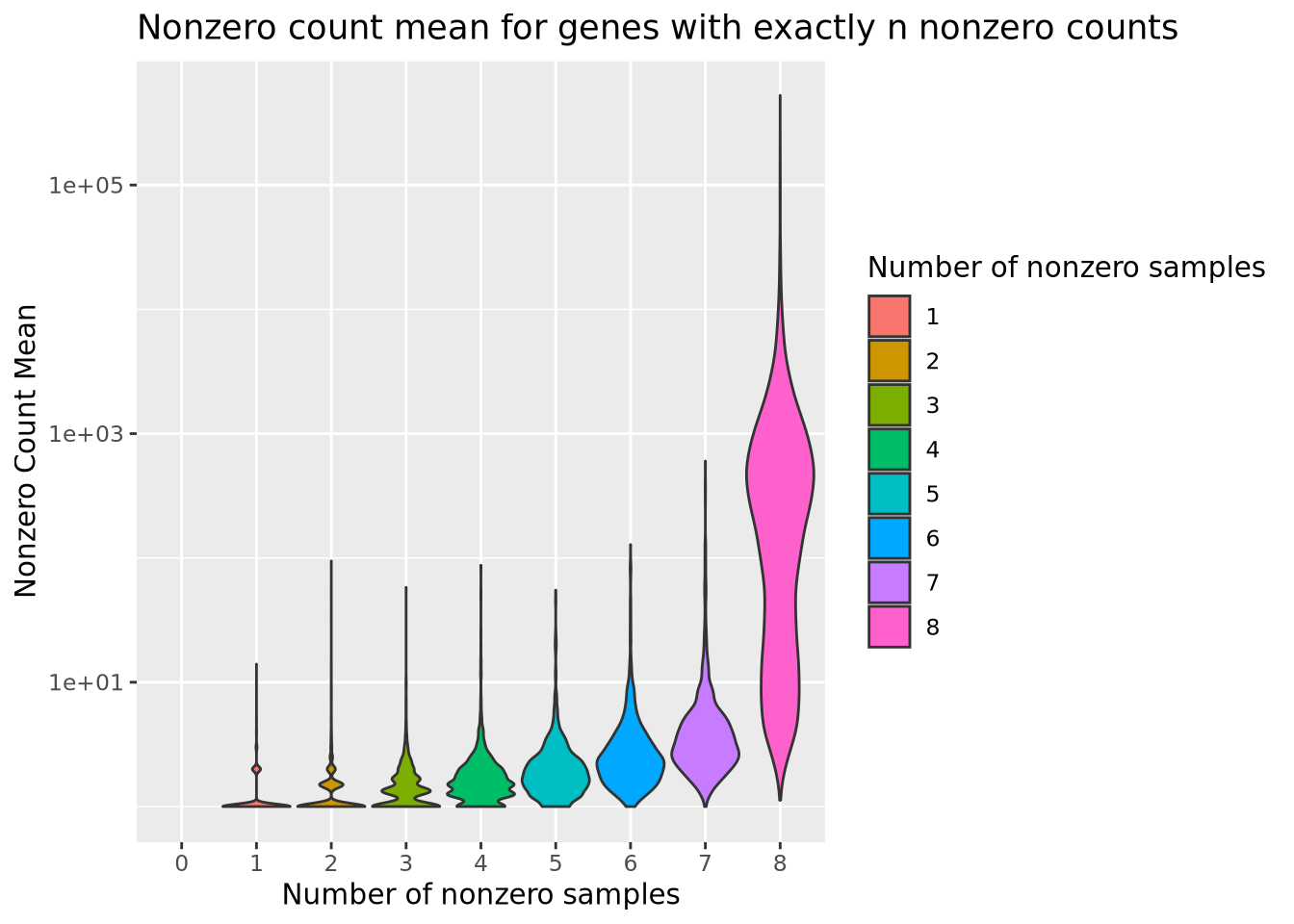

We may gain additional insight into this problem by considering the distribution of counts for genes present in at least \(n\) samples as above, rather than just the number of nonzero counts. Below is a plot similar to that above but, which plots the distribution of mean counts across samples within each number of nonzero samples:

# calculate the mean count for non-zero counts in genes with exactly n non-zero counts

mutate(counts,`Number of nonzero samples`=factor(rowSums(counts[-1]!=0))) %>%

pivot_longer(-c(gene,`Number of nonzero samples`),names_to="Sample",values_to="Count") %>%

group_by(`Number of nonzero samples`) %>%

group_by(gene, .add=TRUE) %>% summarize(`Nonzero Count Mean`=mean(Count[Count!=0]),.groups="keep") %>%

ungroup() %>%

ggplot(aes(x=`Number of nonzero samples`,y=`Nonzero Count Mean`,fill=`Number of nonzero samples`)) +

geom_violin(scale="width") +

scale_y_log10() +

labs(title="Nonzero count mean for genes with exactly n nonzero counts")

From this plot, we see that the mean count for genes with even a single sample with a zero is substantially lower than most genes without any zeros. We might therefore decide to filter out genes that have any zeros, with the rationale that most genes of appreciable abundance would still be included. However, there are some genes with a high average count for samples that are not zero that would be eliminated if we chose to use only genes expressed in all 8 samples. Again, there is no clear choice of filtering threshold, and a subjective decision is necessary.

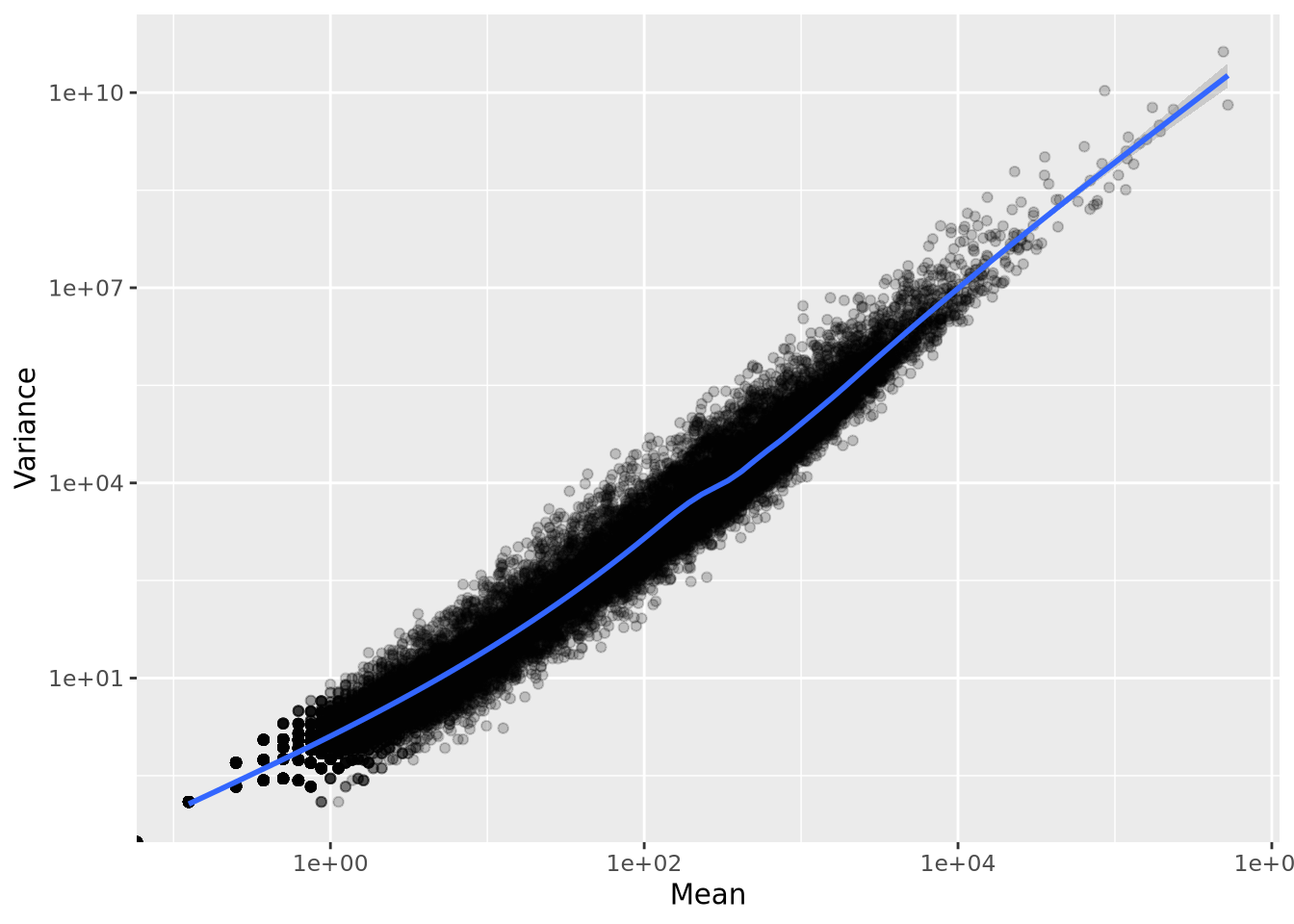

One important property of RNASeq expression data is that genes with higher mean count also have higher variance. This mean-variance dependence means these count data are heteroskedastic, a term used to describe data that has inconstant variance across different values. We can visualize this dependence by calculating the mean and variance for each of our normalized count genes and plotting them against each other:

Unlike in microarray data, where probesets can be filtered based on variance, using low variance as a filter for count data would result in filtering out genes with low mean, which would lead to undesirable bias toward highly expressed genes.

Depending on the experiment, it may be meaningful if a gene is detected at all in any of the samples. In these cases, genes with only 1 read in a single sample might be kept, even though these genes would not result in confident statistical inference if subjected to differential expression. The scientific question asked by the experiment is an important consideration when deciding thresholds.

When the goal of the RNASeq experiment is differential expression analysis, a good rule of thumb when choosing a filtering threshold is to choose genes that have sufficiently many non-zero counts such that the statistical method has enough variance to perform reliable inference. For example, a reasonable filtering threshold might be to include genes that have non-zero counts in at least 50% of the samples. This threshold depends only upon the number of samples being considered. In an experimental design with two or more sample groups, a reasonable strategy might be to require at least 50% of samples within at least one group or within all groups to be included. Taking the experimental design into account when filtering can ensure the most interesting genes, e.g. those expressed only in one condition, are captured.

Regardless of the filtering strategy used, there is no biological meaning to any filtering threshold. The number of reads detected for each gene is dependent primarily upon the library size, where larger libraries are more likely to sample lowly abundant genes. Therefore, we cannot filter genes with the intent of “removing lowly expressed genes”; instead we can only filter out genes that are below the detection threshold afforded by the library size of the dataset.

9.7.8 Count Distributions

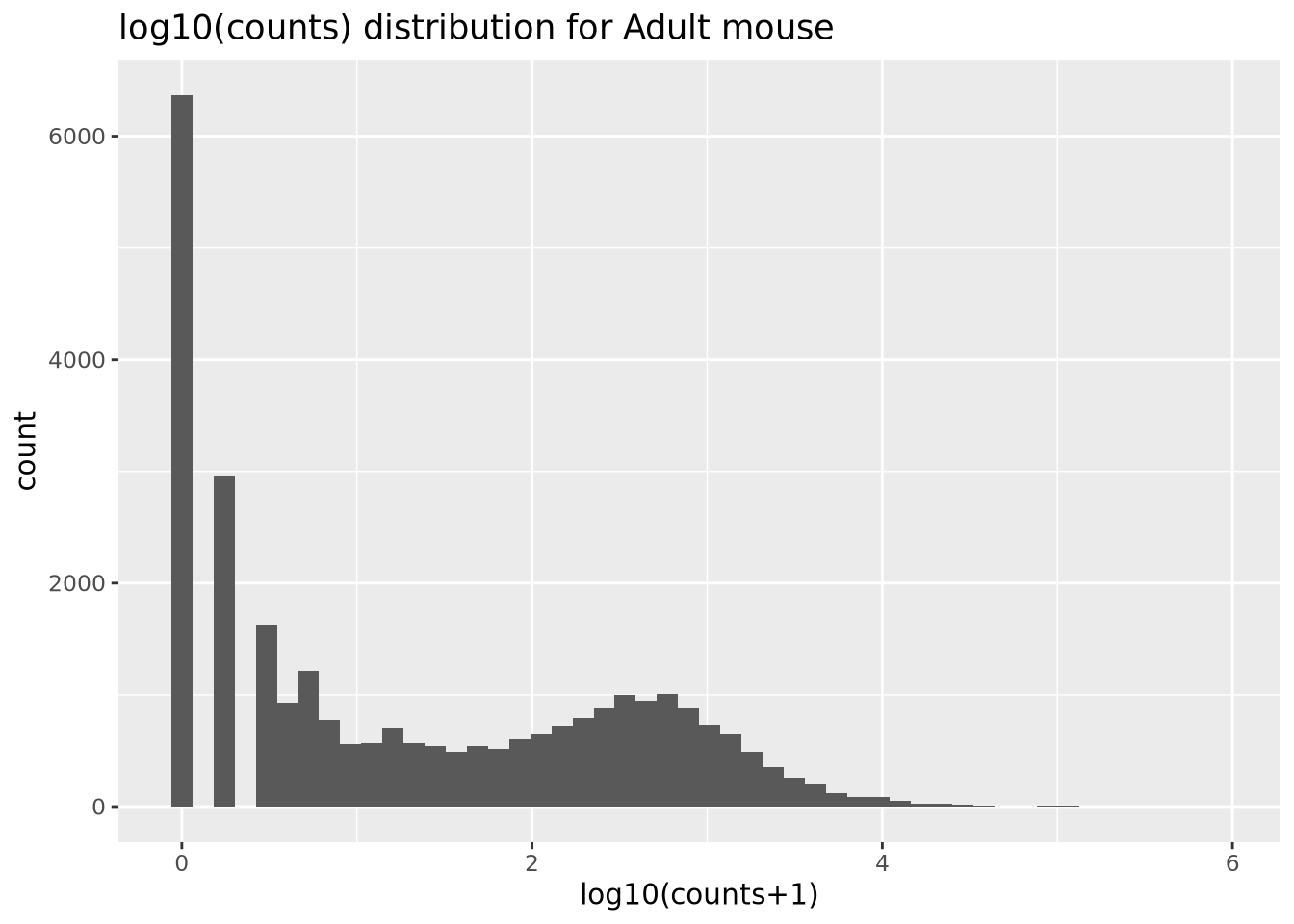

The range of gene expression in a cell varies by orders of magnitude. The following histogram plots the distribution of read count values for one of the adult samples:

dplyr::select(filtered_counts, Ad_1) %>%

mutate(`log10(counts+1)`=log10(Ad_1+1)) %>%

ggplot(aes(x=`log10(counts+1)`)) +

geom_histogram(bins=50) +

labs(title='log10(counts) distribution for Adult mouse')

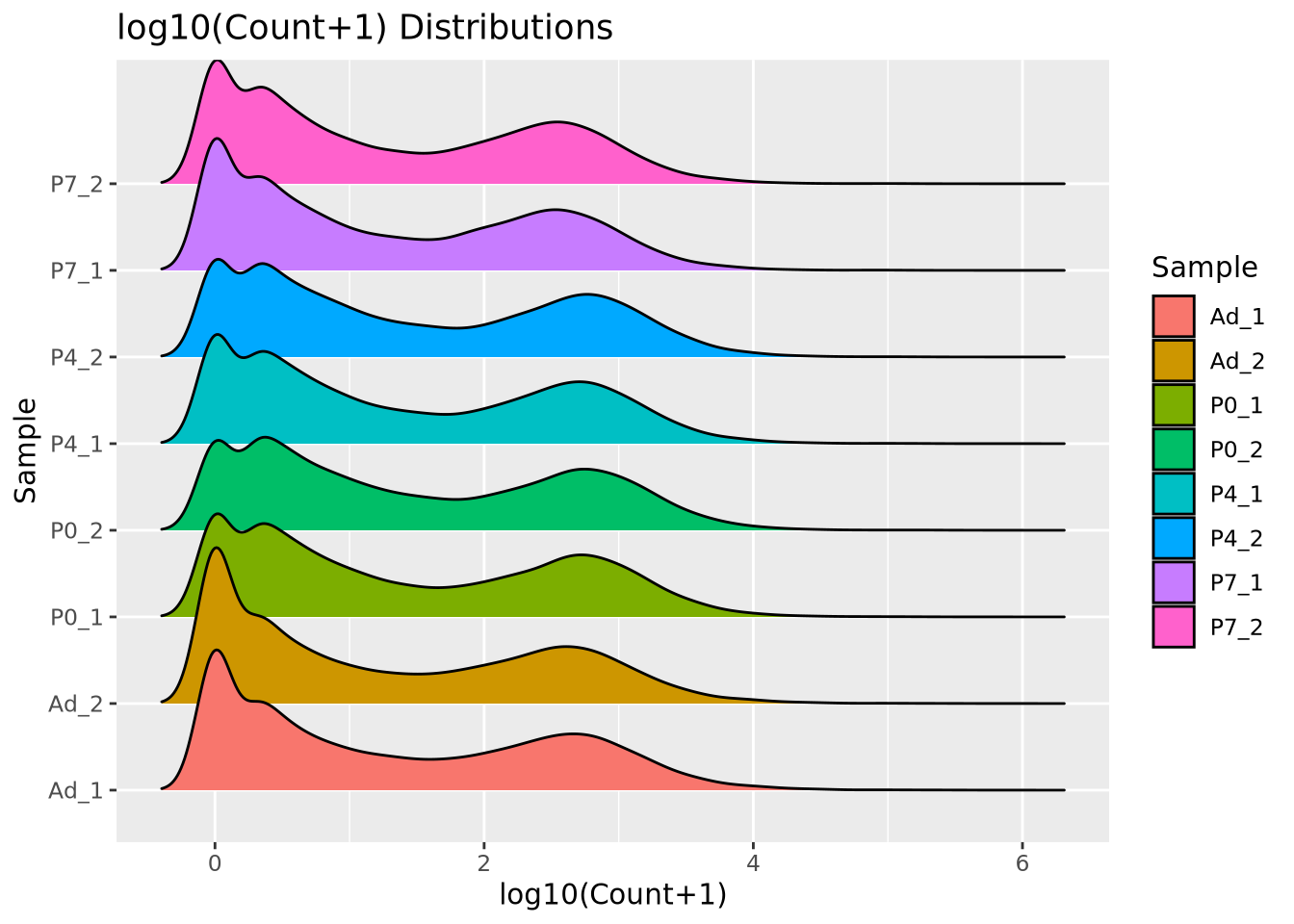

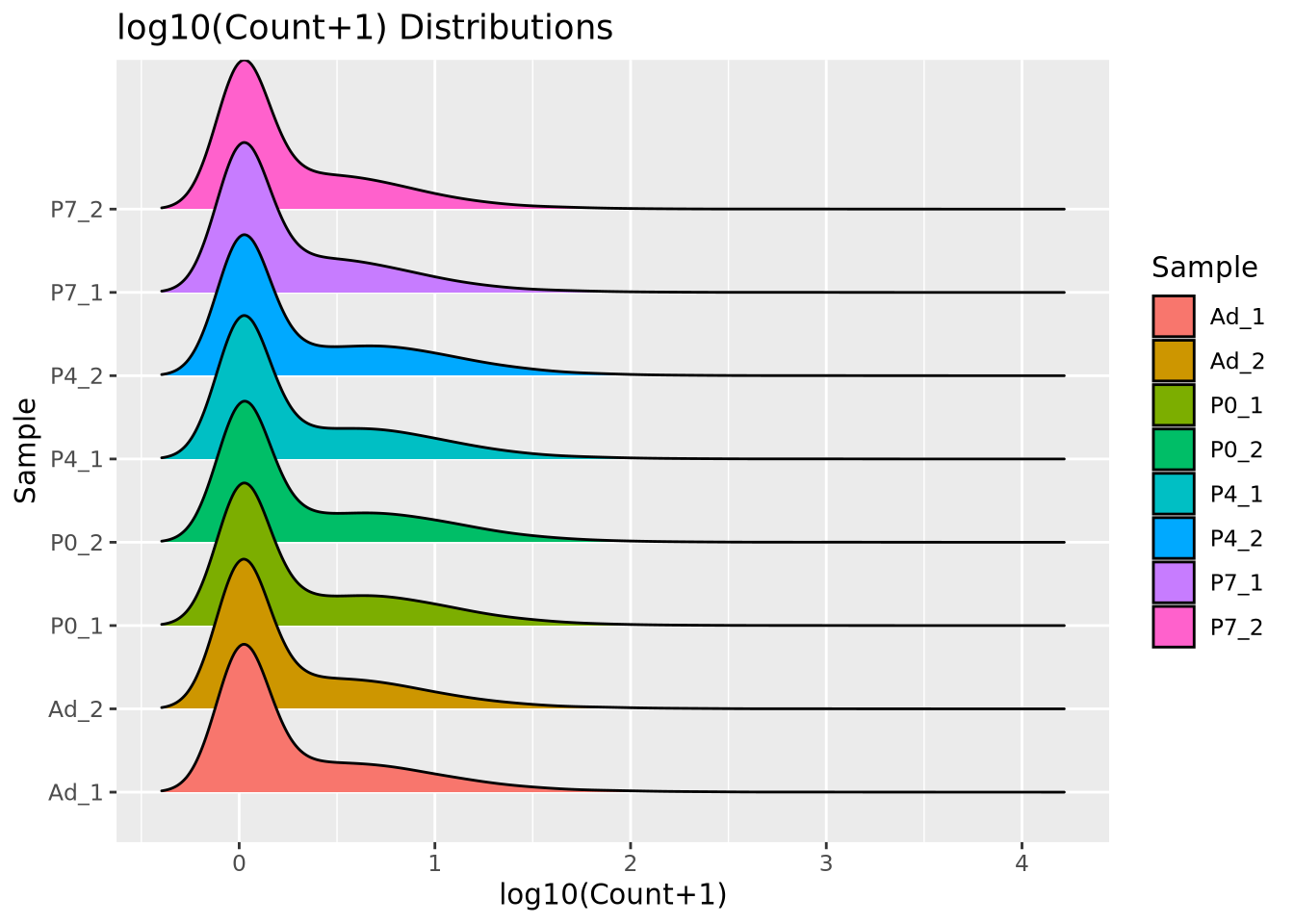

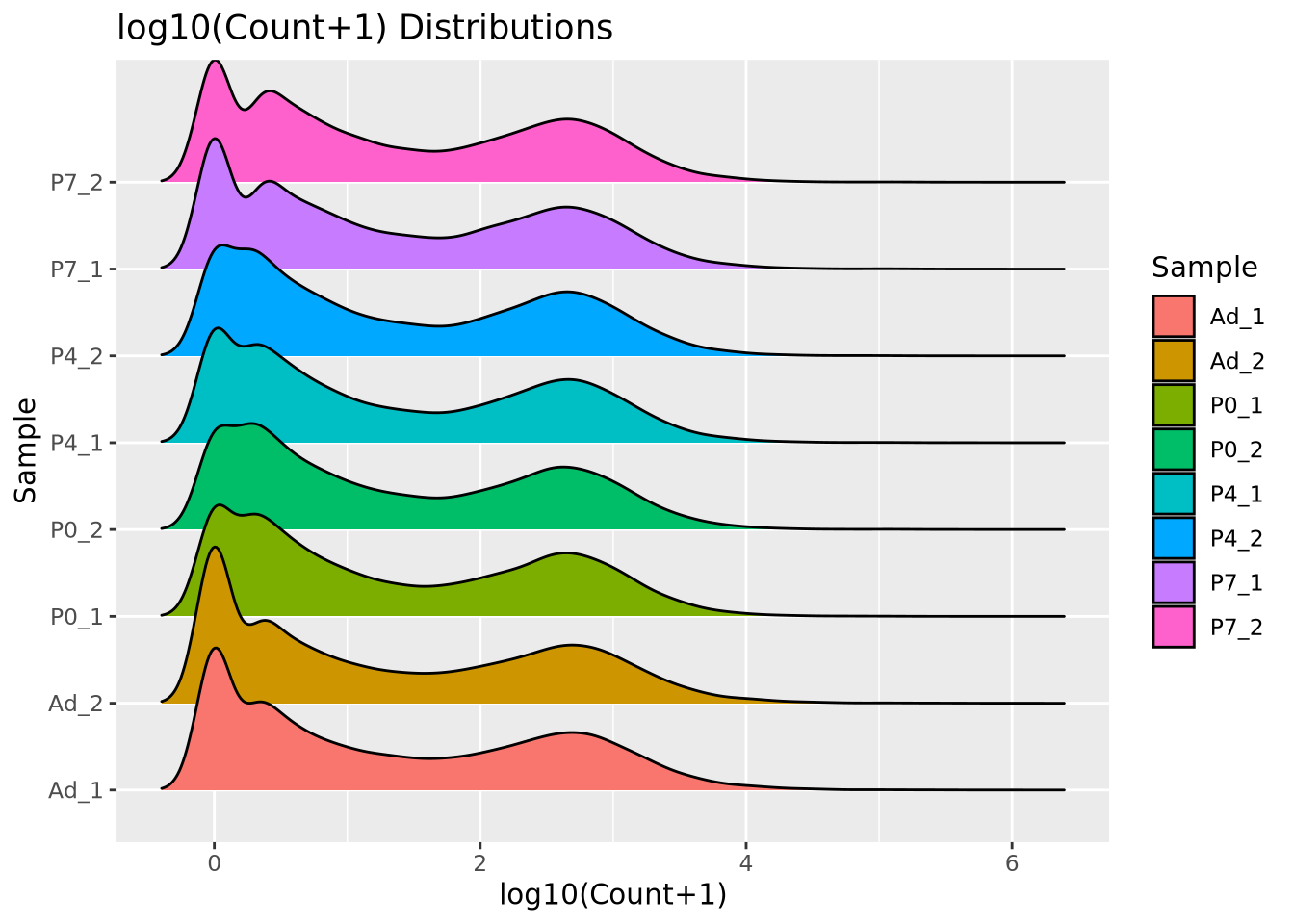

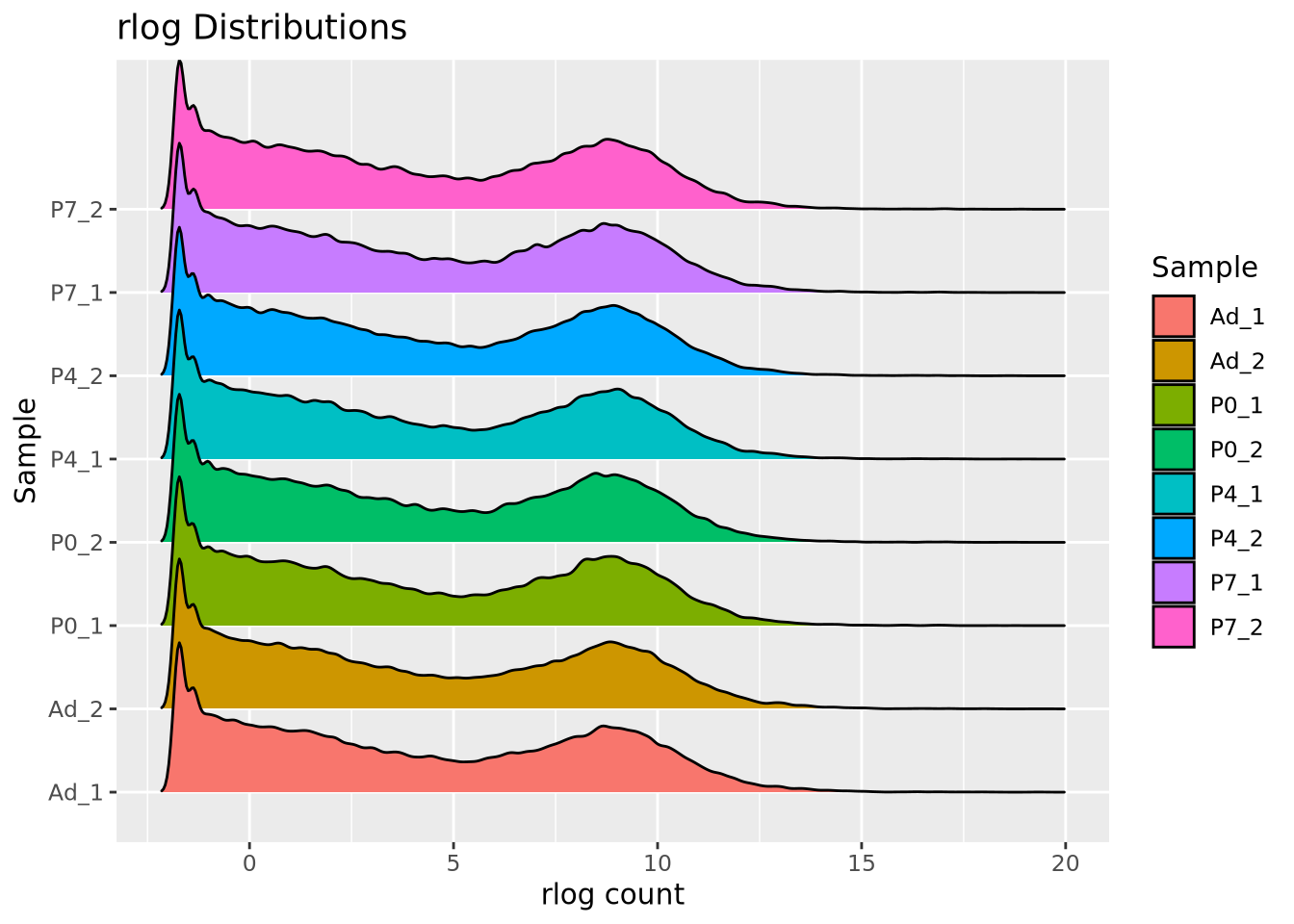

We add 1 count, called a pseudocount, to avoid taking the log of zero, therefore all the genes at \(x=0\) are genes that have no reads. From the plot, the largest count is nearly \(10^6\) while the smallest is 1, spanning nearly six orders of magnitude. There are relatively few of these highly abundant genes, however, and most genes are betwen the range of 100-10,000 reads. We see that this distribution is similar for all the samples, when displayed as a ridgeline plot:

library(ggridges)

pivot_longer(filtered_counts,-c(gene),names_to="Sample",values_to="Count") %>%

mutate(`log10(Count+1)`=log10(Count+1)) %>%

ggplot(aes(y=Sample,x=`log10(Count+1)`,fill=Sample)) +

geom_density_ridges(bandwidth=0.132) +

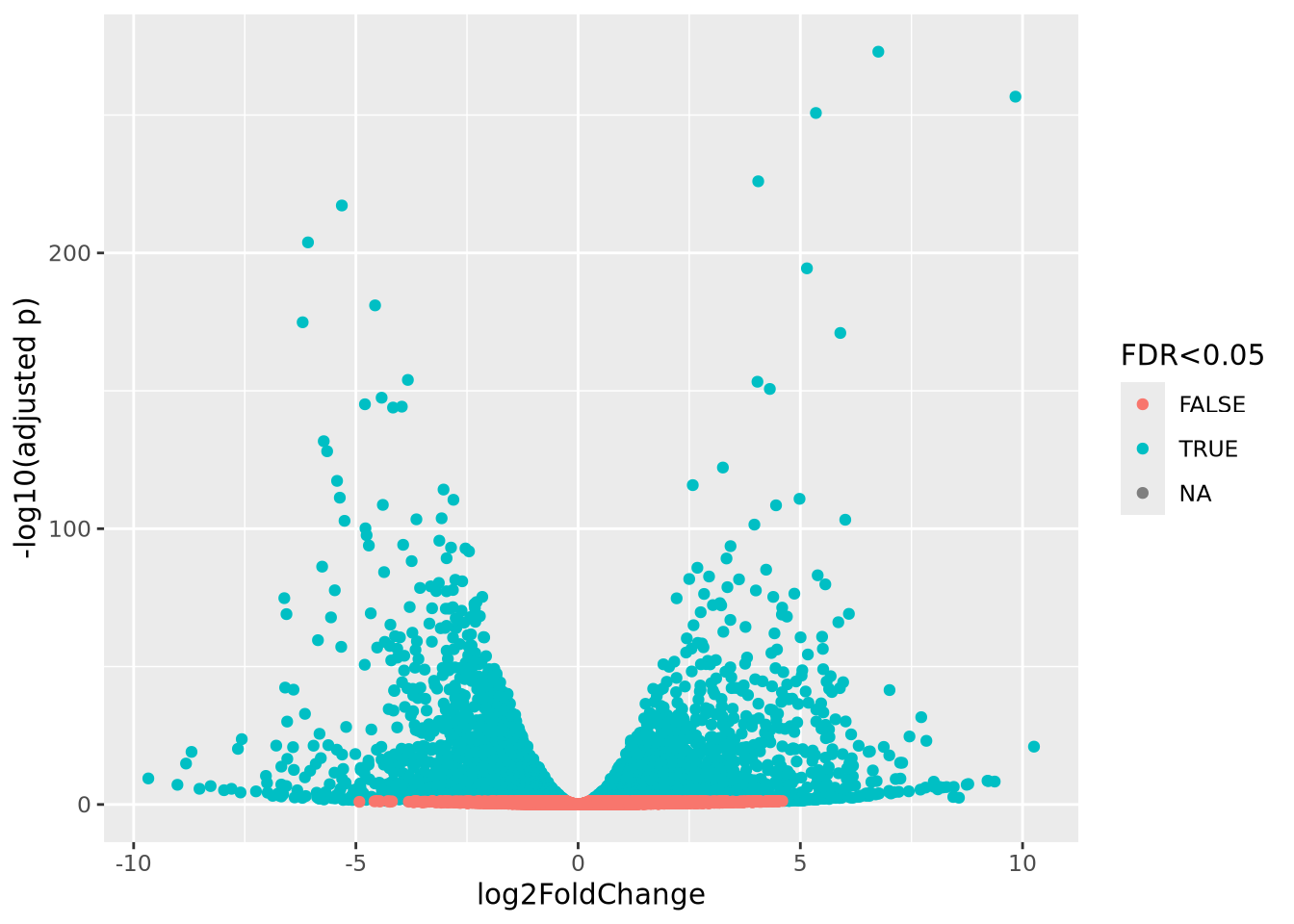

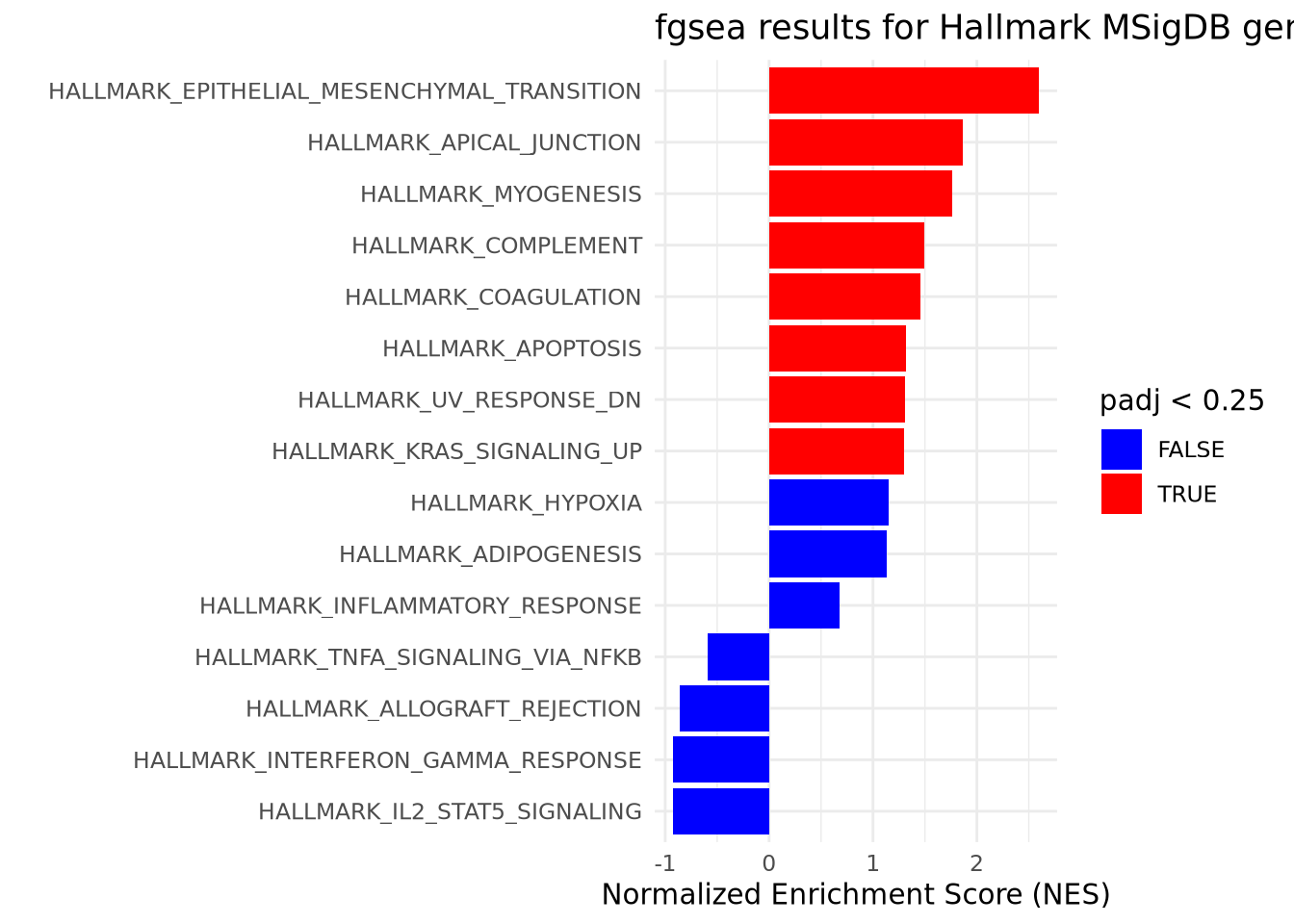

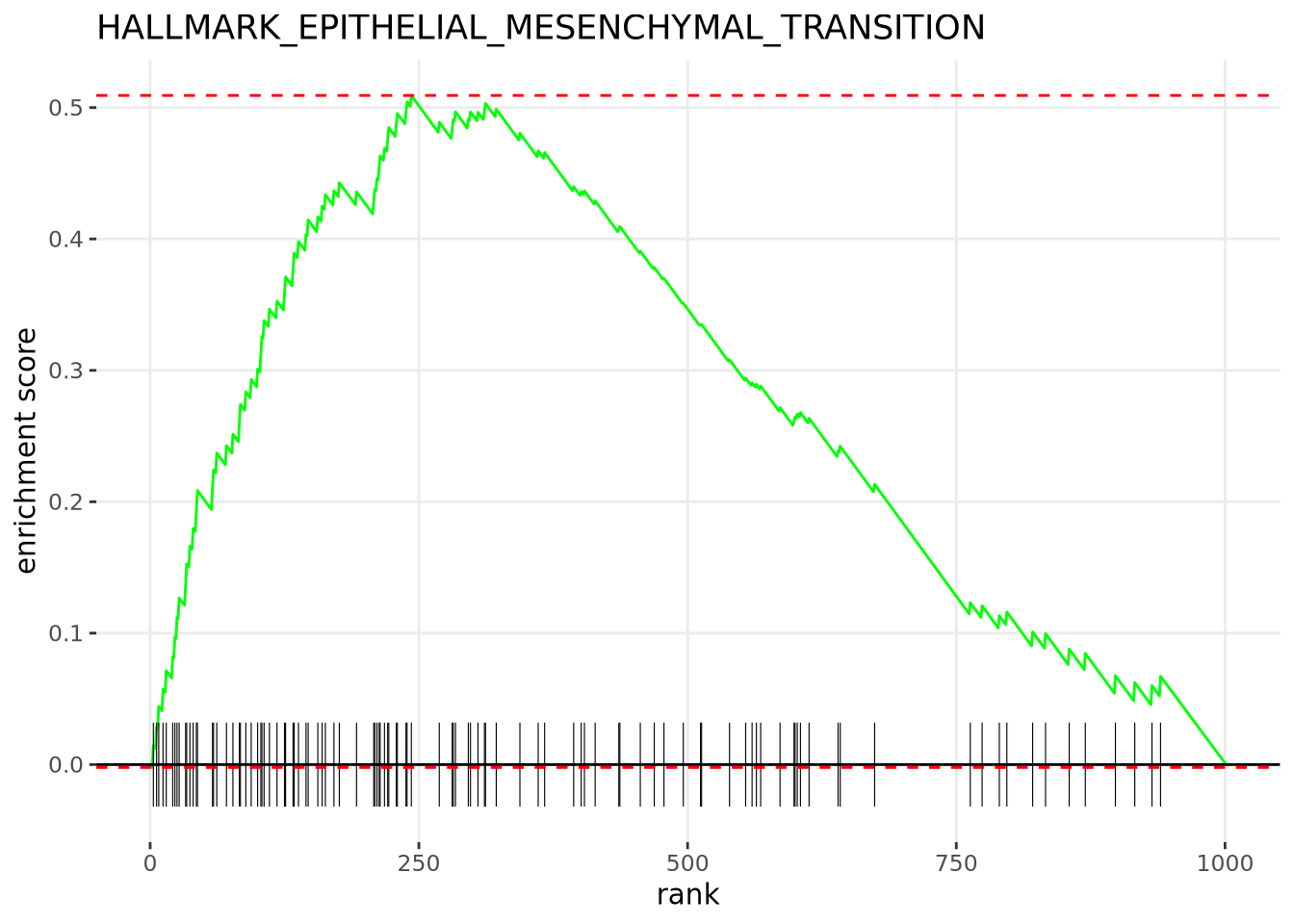

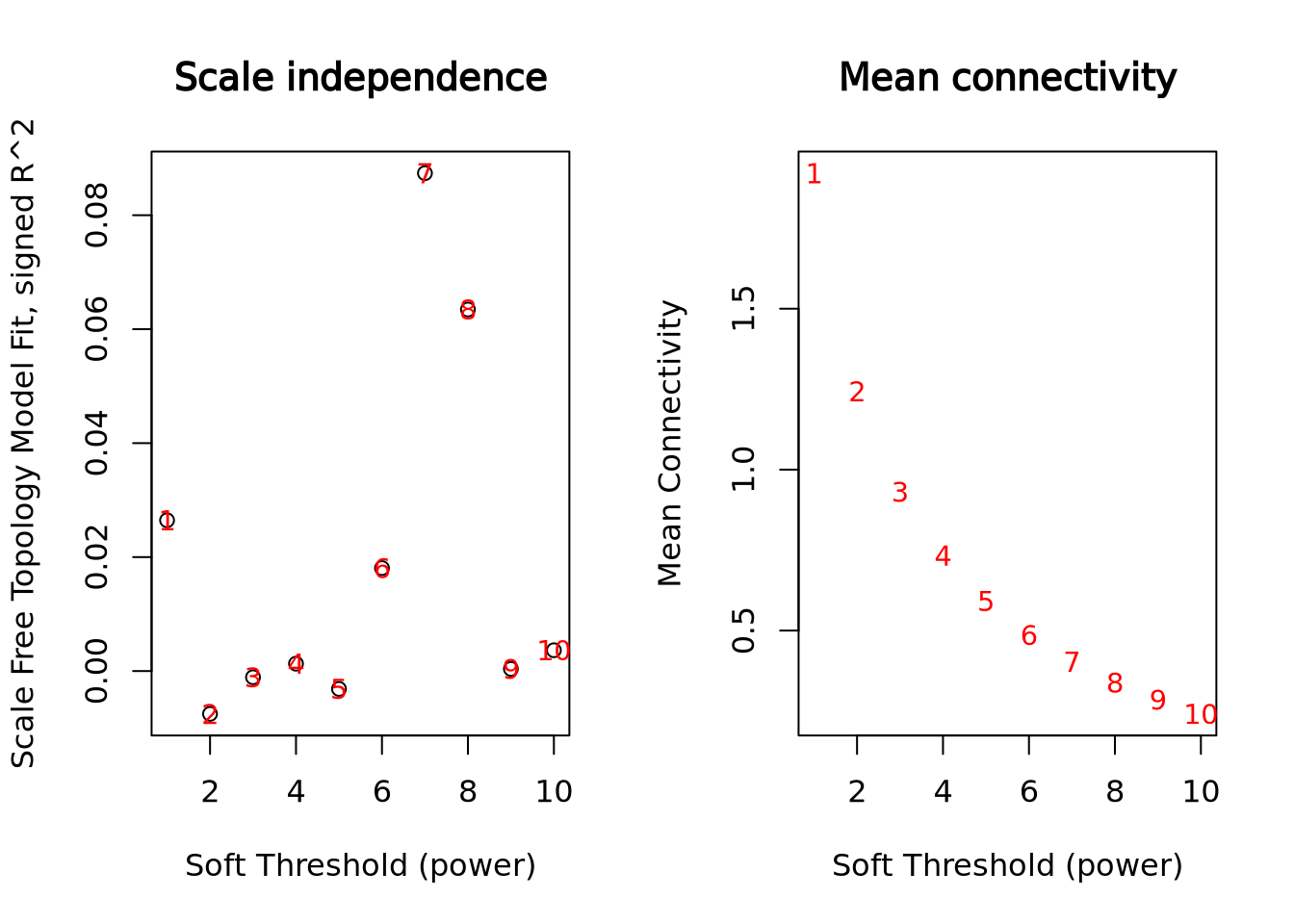

labs(title="log10(Count+1) Distributions")